Contents:

With years of background in software development and an AI Group Leader role at MobiDev, I’m still captivated by the striking rise of this technology and its ramifications. Our team has managed to follow it closely and learned to apply innovations to address specific business challenges. At MobiDev, we identify trends that can deliver benefits now, versus those yet to reach their full potential.

In this article, we are going to shed light on the latest trends in machine learning (ML), and how they can drive business growth and provide tangible value. From GenAI creating complex multimedia content to the rise of small language models (SLM) – the trends listed below will be in the headlines a lot in 2026.

Trend #1. The rise of generative models for complex content generation

Generative models have already altered text-based tools like OpenAI’s GPT models. But, the burgeoning interest in these models is not solely confined to written language. Now they encompass a diverse array of content types, including graphics, video, and music. This shift is expected to further expand at a CAGR of 37.6% from 2025 to 2030, enhancing artistic expression and practical applications.

Let’s explore the most prominent examples:

1. GenAI in visual arts

One of the great examples is Stable Diffusion which reached significant advancement in text-to-image synthesis. Unlike traditional diffusion models that work in image space, this tool operates in a compressed latent space, allowing for a more efficient generation of high-resolution images. Another noteworthy tool is Muse, created by the tech giant Google. It acts as a collaborative partner for artists augmenting their creative workflow. Imagen, another GenAI model from Google, focuses on photorealistic imagery. Utilizing a combination of advanced diffusion processes, Imagen translates textual descriptions into strikingly realistic images.

2. GenAI in video production

Models like Synthesia and Runway ML are leading the charge. Synthesia allows artists to create realistic AI-generated videos with lifelike avatars interpreting content in multiple languages. The scope of implications this tech enables is immense. This is particularly true in fields like education, marketing, and entertainment, where personalized video content is generally produced on a massive scale. Another great example is Runway ML. With its help, creators can manipulate video elements while streaming, delivering real-time editing and effects that were once the domain of high-budget productions.

3. GenAI in the music industry

Tools like Nvidia’s Fugatto and Fluxmusic help compose original music across various genres and styles via a prompt. Fugatto employs a “style transfer” method to assimilate and blend characteristics from different musical styles. It can thus create unique new pieces that reflect the intricacies of original genres. This capability offers composers a space for experimentation.

Fluxmusic is another innovative player in the realm of AI-driven music generation, focusing on the fluidity and adaptability of musical creation. This model stands out for its emphasis on real-time interaction, designed to create ambient and generative soundscapes. This feature makes the tool perfect for composers working with film, gaming, and therapy, where atmosphere and mood are paramount.

However, we should stay vigilant about the ethical and societal impact of how we use generative models. Concerns regarding copyright, authenticity, and misuse arise and must be addressed. It’s worth remembering that technologies should serve as tools for enhancement rather than disruption.

How to implement this trend

- Identify the user needs for AI-generated content

- Determine the resource limitations of your system

- Identify potential use cases or topics that should not be addressed using the developed approach

- Develop the model

Trend #2. Shifting from LLMs to SLMs

The rise of domain-specific language models has inspired a key realization — when it comes to model size, bigger isn’t always better. Although large language models (LLMs) have fueled GenAI, they still pose significant constraints. Training and deploying models on hundreds of billions of parameters requires enormous financial resources and server infrastructure. This is something only a handful of tech giants can afford. Further, researchers at the University of Washington state that a typical day of ChatGPT usage can match the daily energy consumption of 33,000 U.S. households.

Smaller language models (SLMs) present a sustainable alternative. They deliver impressive outcomes with a much less resource-intensive effort. For example, Qwen is a lightweight SLM that can run effectively on devices with limited processing power. This facilitates the integration of ML in everyday technologies, from mobile apps to IoT devices.

Another good example is Pythia, built to facilitate research and experimentation in the field of NLP. Its architecture incorporates a variety of components that can be independently modified. Hence, researchers can tailor the model to specific tasks or datasets.

Research shows that smaller models trained on larger, more diverse datasets often outperform larger counterparts trained on limited data. This has pushed the trend of maximizing output with fewer parameters.

What other merits do SMLs possess?

- Smaller models require less hardware to operate, making them cost-effective. This paves the way for a broader range of individuals and organizations to play their part in ML development and usage. As smaller enterprises, with fewer educators, and researchers — these models empower diverse communities to innovate and improve existing systems.

- SLMs open up possibilities for applications like edge computing and IoT (Internet of Things). Running models on smaller devices, such as a smartphone or laptop, boosts performance in decentralized scenarios. In addition, without the need to send sensitive data to external servers, users gain greater control over their data security.

- The smaller the model, the easier it is to trace and understand how it makes decisions. LLMs are immensely complex, often operating as “black boxes,” making it challenging to pinpoint the reasoning behind outputs. This is where technology trust issues take root. Explainability is critical for building trustworthy systems that operate transparently and are amenable to continuous improvement.

How to implement this trend

- Identify resource and time limitations

- Select an SLM model that aligns with these limitations

- Train the model and identify topics where it exhibits hallucination

- Deploy the model

Trend #3. GPUs for accelerated model training

Graphics Processing Units (GPUs) form the backbone of machine learning training due to their speed and efficiency. Advanced GPUs process datasets and train complex models even faster than traditional CPUs. They use sequential computing, processing one prompt at a time requiring each task to be fulfilled before proceeding to the next.

Why GPUs are essential? GPUs can handle simultaneous operations, which is necessary for large-scale ML tasks. Also, for businesses reliant on ML, GPUs can help cut down training times and cloud computing expenses. Cloud-based GPU solutions are now available on the market. So businesses can decide between GPU on-premise or cloud depending on scalability needs.

We feel it’s important to note here that rising cloud computing costs overlap with declining hardware availability (this is another reason for the shift toward smaller models driven by both necessity and ambitious business goals). Hardware shortage has put significant pressure on the industry to ramp up GPU production. It also unveiled the need to develop more affordable and efficient hardware solutions, which are simpler to manufacture and deploy. As of today, much of the computational burden rests with cloud providers. Since hardware shortages add more challenges and expenses to on-premise server setups, cloud providers should get ready to revamp and optimize their systems to meet the growing demands of GenAI.

How to implement this trend

- Determine the compute resources required for model training

- Set up the GPU machine

- Prepare the AI model for training

- Train and deploy the model

Trend #4. Optimized computing for better model performance

Edge computing is a key in distributed computing frameworks, accelerating processing speeds and enabling real-time analysis. This approach reduces bandwidth use and latency. With these two parameters minimized, data is better optimized to be transferred to centralized systems for further processing. Tools like Google Cloud platforms, prove the capabilities of edge computing by making remote workspaces more efficient.

Edge ML is ensuring resiliency across industries. Here’s how:

- In healthcare: ML-based wearable devices deliver real-time insights into patient’s essential health indicators (blood pressure, heart rate, etc.) paving the way for early medical interventions.

- In autonomous vehicles: data collected by edge biometrics technologies (sensor cameras) vehicles adapt to deviating road situations without centralized servers. One notable example is the integration of computing systems within 5G Points of Presence (PoPs), like Verizon’s 5G Edge platform, that boosts vehicle intelligence.

- In manufacturing: machines equipped with advanced analytics help identify potential malfunctions, and with predictive maintenance, schedule necessary repairs before issues escalate. This minimizes downtime and lowers operational costs.

- In retail: technologies like smart shelves help retailers monitor product stock accurately. They then help optimize store layouts facilitating store workers’ operational load.

However, edge ML can’t handle complex problems without advanced tools. That is why quantum computing is gaining traction in ML. Quantization models available on the Hugging Face platform reduce memory usage and computational demands via lower-precision data types (8-bit integers (int8)) for weights and activations. This way, larger models can fit into memory while accelerating inference processes.

While these distinct technologies were designed for different needs, they hold immense potential for collaboration. Merging the computational strength of Quantum Computing with the processing capabilities of Edge Computing, industries can address a broader spectrum of complex scenarios. For instance, quantum algorithms implemented at the edge can deliver real-time insights for informed decision-making. Similarly, the data produced at the edge can train and refine quantum machine learning models, boosting the effectiveness of both technologies. This synergy could facilitate quantum-inspired machine learning forecasts directly at the edge. This would provide near-instant analysis vital for finance, retail, etc.

How to implement this trend

- Identify the need to reduce model size due to resource constraints

- Select a model size reduction approach, such as quantization

- Optimize the initial model and deploy it

Trend #5. Automated machine learning for speed and cost-cutting

AutoML automates critical stages of the data science workflow. It streamlines processes like data preparation, feature engineering, model selection, hyperparameter tuning, etc. Users can train models directly from raw data and then deploy these models with just a few clicks. This makes advanced machine learning feasible even for those lacking deep technical knowledge.

Why is this trend important? Given the shortage of ML specialists on the market, companies spend a tremendous amount of resources to implement their solutions in real-world scenarios. This is because machine learning pipelines were designed and executed manually by experts. Whether intentionally or not, they created a steep barrier for non-experts. AutoML makes the process simpler for both novices and experienced developers.

Note that AutoML doesn’t render data scientists or ML engineers obsolete. Instead, it assists them with task automation within ML pipelines so that they can focus on higher-value activities (interpreting results, fine-tuning models, etc.)

By leveraging AutoML, businesses can prioritize growth and innovation rather than spending time on the minutiae of ML model creation and training. AutoML platforms have already proven to be useful in some industries.

- In agriculture, they facilitate product quality testing processes.

- In cybersecurity, they monitor data flows for malware, and spam, preventing cyber threats.

- In entertainment, they optimize content selection and recommendation engines.

- In marketing, they enhance behavioral marketing campaigns via recommendations and predictive analytics, ultimately improving engagement rates.

- In retail, they streamline inventory management to cut costs and boost profits.

How to implement this trend

- Ensure your task has no complex dependencies and does not require custom training

- Develop the approach and monitor its performance to enable timely adjustments

Trend #6. Multimodal machine learning

Multimodal ML represents a leap forward from traditional single-mode data processing. This technology integrates multiple input types like text, images, and sound. This approach mimics the human ability to process sensory information, opening new doors for interaction and innovation. Here’s a brief outlook of the tasks these models perform:

- Visual Question Answering (VQA) and Visual Reasoning: VQA employs computer vision to interpret images. Meanwhile, visual reasoning enables the system to infer relationships, compare objects, and understand details from context.

- Document Visual Question Answering (DocVQA): DocVQA equips ML to “read” and interpret documents directly from images. With the help of computer vision and NLP, it extracts specifics about the document’s content and layout.

- Image Captioning: merging vision and language, it allows for the descriptive interpretation of an image. For instance, it scans a photo and summarizes it as “a sunset over a calm sea” or “a busy marketplace”. This ML can tell stories about the visual world, one picture at a time.

- Image-Text Retrieval: this task links images to their corresponding descriptions, acting as a search engine that handles visuals and text interchangeably. This technology delivers detailed textual descriptions of images.

- Visual Grounding: this task links language to specific parts of an image. For instance, when asked, “Where’s an orange in the fruit bowl?” it will identify and highlight the orange. This enables precise connections between visual elements and their linguistic references.

- Text-to-Image Generation: this method creates images based on written descriptions, ranging from realistic depictions to imaginative abstractions.

Basically, multimodal ML processes diverse inputs and produces more informed, nuanced responses. Whether applied in education, transportation, or content generation, it is setting the stage for systems aligned with human needs and complexities. The future of ML lies in its ability to connect modalities, and multimodal systems are leading the way.

How to implement this trend

- Identify the necessity of using multimodal models

- Ensure your system can support such models within its limitations

- Implement and deploy the multimodal model

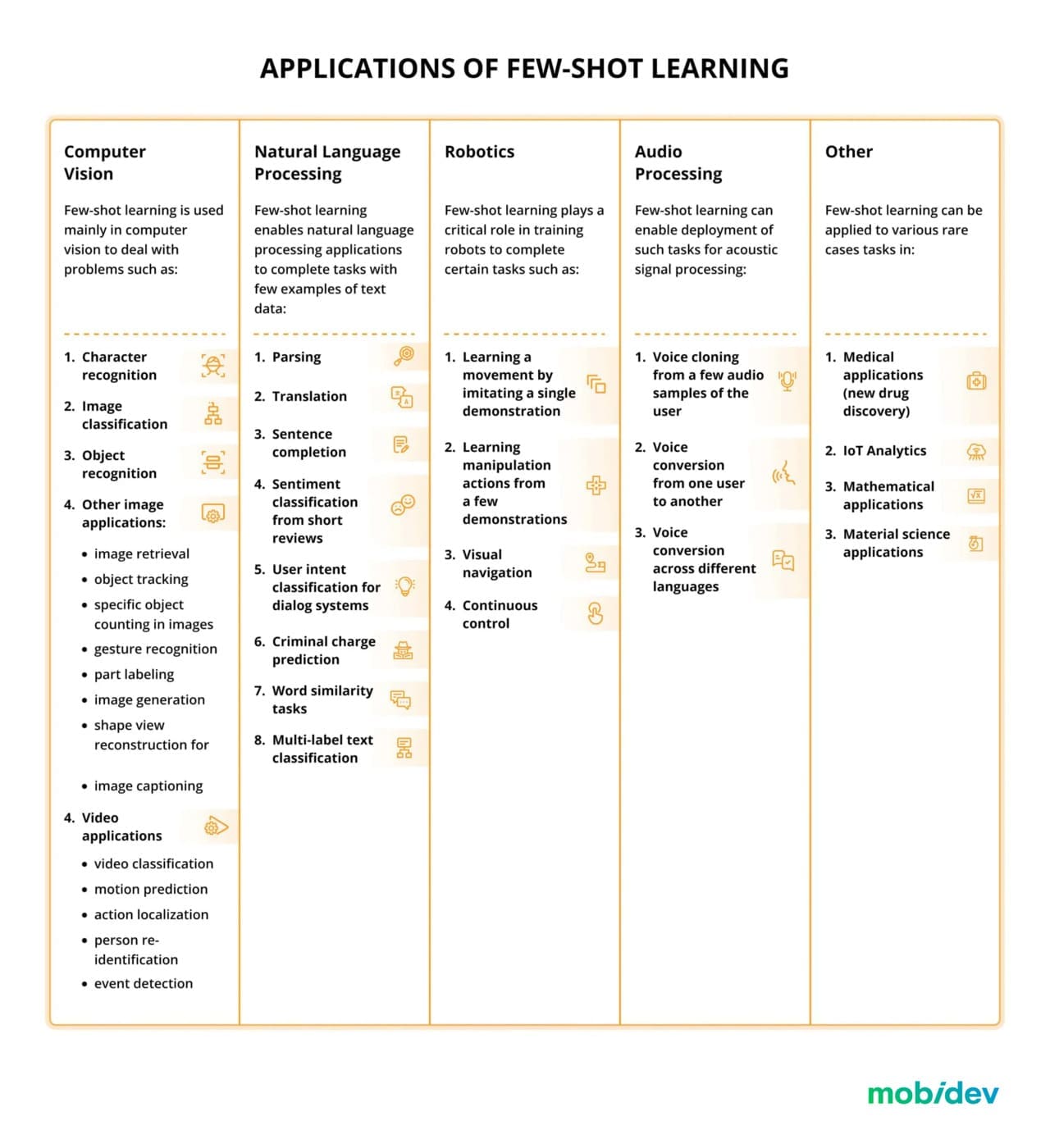

Trend #7. Few-shot & zero-shot prompting

Few-shot and zero-shot prompting methods leverage LLMs to perform a wide variety of tasks. Since they work with minimal or no task-specific training data, they address challenges in data scarcity and adaptability.

Few-shot prompting mechanisms work as follows: the user presents several examples of input-output illustrating the desired behavior. For instance, let’s say a text classification task contains sentences labeled as “positive” or “negative”. The model uses these labeled examples to discern patterns/cues informing about the task.

Zero-shot prompting relies on the “understanding” gained from pre-training on data. This method uses natural language instructions instead of specific examples. For instance, it can sort text as “spam” or “not spam” without needing examples of spam emails.

Why do these methods matter in real-world applications? Many real-world scenarios lack access to comprehensive, labeled datasets for every likely outcome. These techniques empower models to adapt to new tasks without large-scale data labeling. In fields like healthcare, customer service, and resource management, where new data classes emerge frequently, this approach can be a game-changer. For instance, few-shot learning for imaging helps enhance medical scan analysis speed and robustness. It represents a shift toward versatile ML applications that evolve alongside varying needs.

How to implement this trend

For prompting:

- Identify the necessity of few-shot or zero-shot prompting

- Ensure compatibility with prompting approaches

- Develop and fine-tune the prompts

- Test and evaluate

- Deploy the prompting solution

Trend #8. Reinforcement learning

Reinforcement learning (RL) stands out among machine learning algorithms for its unique approach to learning via interaction. With supervised learning, fixed data guides the process. RL, however, operates in unsupervised environments with limited instruction. This iterative process makes RL adaptable. It can excel not only in gaming, but also in fields like robotics and healthcare. At its core, RL mirrors human traits like evolution and decision-making, altering the way machines learn and interact.

Reinforcement learning shines across various industries:

- In robotics: RL equips robots to function in unstructured settings. They can grasp objects, navigate the environment, and execute complex movements.

- In finance: RL assists in portfolio management, algorithmic trading, risk assessment, etc. Via rapid data analysis, algorithms forecast market responses helping asset managers adapt to volatile circumstances.

- In healthcare, RL helps personalize treatments and enhance patient care, and drug discovery. It can tailor care plans based on individual patient data for better treatment outcomes.

Another way to train ML is using Reinforcement learning from human feedback (RLHF) which uses human input to train a “reward model”. This model is then employed during the RL process to enhance the agent’s performance.

RLHF is handy for tasks with objectives that are complex, vague, or challenging for algorithms to define. For instance, defining “funny” in precise mathematical terms is nearly impossible (programmatically). Evaluating and rating jokes created by LLM is easy though. The model converts human feedback into a reward function, which then tells the LLM to improve joke-writing capabilities.

The hottest trend in RL so far is fine-tuning large vision-language models (VLMs). Scientists provide a task description and prompt the VLM to generate chain-of-thought (CoT) reasoning. The CoT approach facilitates the exploration of intermediate steps that lead to the desired text-based action. Subsequently, the output is converted into executable actions to interact with the environment which then delivers task-specific rewards. These rewards help fine-tune the entire model through RL.

How to implement this trend

- Identify the necessity of using a reinforcement learning (RL) system for your task

- Ensure your system has the resources and infrastructure to support RL training and execution

- Design and implement the RL system, including defining the environment, reward function, and policy structure

- Train the RL system and evaluate its performance in the target environment

- Deploy the trained RL system and monitor its performance for necessary adjustments

Trend #9. MLOps

Despite rapid ML evolution, many data scientists face persistent challenges when building exceptional models. A staggering 80% of these projects never make it to deployment. The thing is that creating a robust ML model is just one aspect of the broader workflow. Without successful deployment, monitoring, and proper maintenance, models fail to achieve their full potential. This is where Machine Learning Operations enter the game.

MLOps practices, when incorporated correctly, allow organizations to automate critical aspects of the ML lifecycle, up to post-deployment improvements. This automation simplifies the integration of ML solutions into broader software development pipelines.

Here’s how MLOps enhances ML workflows:

- Automated workflows translate into significant cost savings for strategic resource allocation.

- Features for tracking data usage, model changes, and access permissions ensure all stakeholders adhere to governance standards.

- A unified platform for data scientists, software developers, and IT teams to work together facilitates the consistent movement of models from development to production.

- Every stage of the ML lifecycle, from data preparation to model deployment, is meticulously managed, resulting in better oversight of complex ML projects.

Why MLOps is extremely relevant right now? Since forecasts imply the use of machine learning models in production settings will expand, MLOps may just serve as a key driver of this growth. Achieving meaningful results requires more than just sophisticated algorithms. One of the top platforms for managing ML models is MLflow. It provides comprehensive frameworks for experimentation and deployment facilitating reproducibility and scalability for complex ML projects. MLflow consists of four key components:

- Tracking: users log in and monitor ML training processes (runs) and perform queries using Java, Python, R, or REST APIs

- Models: a standardized format for packaging and reusing models across environments and pipelines

- Model Registry: a centralized system for managing models and their lifecycle via organized versioning and governance

- Projects: packaging the code used in data science tasks for seamless reuse

How to implement this trend

- Identify the need for MLOps by evaluating the complexity of your ML workflows, the frequency of updates, and the scale of deployment.

- Choose tools and platforms for version control, CI/CD pipelines, model monitoring, and resource management

- Configure a collaborative environment with version control for code, data, and model artifacts.

- Develop CI/CD pipelines to automate data preprocessing, model training, testing, and deployment.

- Deploy tools for monitoring model performance and data drift, and establish feedback loops for continuous improvement.

- Deploy the finalized pipeline and establish processes for ongoing maintenance and scalability.

Trend #10. Low-code/no-code machine learning

No-code ML platforms allow users to create models and generate predictions without the need to write code. Traditional ML requires a skilled data scientist and knowledge of programming languages (Python). A data scientist acts as follows:

- manually prepares data

- perform feature engineerings

- allocate a portion of the data for training and tuning

- deploying the model into a production environment.

With the help of no-code platforms, all users, not just data scientists, can design and deploy machine learning models quickly. It’s different from AutoML as well. AutoML facilitates certain processes, like data preparation and algorithm selection. But it still requires expertise from data scientists. No-code ML eliminates the reliance on specialized knowledge. Yes, it does speed up the app development process. But it also has its own set of limitations.

- Standardized API interactions simplify workflows, but restrict functionality, especially when businesses need custom solutions.

- Developers may also face difficulties due to the lack of capabilities like automated testing, version control, and code reviews.

- Ml platforms work well for simple, event-driven tasks while struggling with high data volumes or complex workflows.

- Integrations based on prebuilt connectors on a low-code/no-code platform are heavily dependent on the platform itself, which risks vendor lock-in.

- Low-code/no-code platforms often apply a usage-based pricing model, creating unpredictable expenditures.

How to implement this trend

- Ensure your task has no complex dependencies and does not require custom training.

- Develop the approach and monitor its performance to enable timely adjustments.

Trend #11. Retrieval-augmented generation

Retrieval-augmented generation acts as a bridge between a user’s input and the LLM output. Instead of relying solely on existing training data, a RAG-supported application retrieves additional, high-quality information. This comes in handy in enterprise settings reliant on precise information. It thus delivers accurate answers and avoids ML hallucinations (false or fabricated outputs).

Hallucination happens when a model generates a factually incorrect, irrelevant, or inconsistent output. These inaccuracies arise either because LLMs rely on static training data or fail to understand the user query. RAG, though aimed at this problem, can still encounter similar challenges. It happens when RAG relies solely on unstructured and generalized internal data. For example, an airline chatbot might provide outdated fare details if the augmented data doesn’t include updated payment policies.

However, with GenAI Data Fusion, RAG can integrate information from multiple sources (Customer 360, Product 360 data, etc.). This enables the generation of highly accurate and context-specific prompts. One example of this approach is K2View’s data product platform. It enables RAG to stream and unify data from diverse sources via APIs or change data capture (CDC). Businesses thus can deliver personalized and accurate responses across a variety of use cases, ranging from hyper-personalized marketing to fraud monitoring.

How to implement this trend

- Identify the need for an RAG system.

- Ensure your system supports necessary components like a vector database and language model.

- Configure the vector database for document indexing and retrieval.

- Integrate the retriever with the language model.

- Deploy and monitor the RAG system for performance and accuracy.

Trend #12: Ethical and explainable models

Debates over whether ML models ensure fairness, accountability, and transparency grow along with the wider usage of this technology. This in turn pushes the trend toward explainable model adoption. Its focus is to create transparent models, allowing users to trust and justify their outputs.

Why is this trend important? The rising use of ML models for content generation raises ethical concerns over its ability to generate harmful or biased outputs. Explainable machine learning helps understand how decisions are made, instilling trust into complex systems.

Two notable techniques for explaining ML decisions are SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations):

- SHAP framework is based on Shapley values, a method for fair payout distribution among players. In the context of ML, the “players” are the features of the model, and the “payouts” represent the model output.

- LIME slightly perturbs the input data to observe changes in predictions. It creates a local linear model that can shed light on how complex models make decisions.

Explainable machine learning possesses several features that can help ensure responsible use of models:

- Unlike traditional “black box” ML models, explainable models outline how output decisions were made.

- Explainable ML helps organizations meet the requirements of regulations (like GDPR) and articulate how automated decisions are reached.

- Since these models are more transparent, data scientists can better examine their work, pinpoint biases or errors, and validate them with greater accuracy.

How to implement this trend

- Identify the need for explainable AI in your system.

- Choose appropriate explainability techniques

- Integrate the techniques into your model pipeline.

- Test and deploy the explainable AI system

Trend #13. Agentic AI

With a 44% CAGR, AI Agents market is projected to reach $47.1B in 2030. This alone is a clear signal that many companies are ready to invest in this technology because they see a business value in it. So what are AI Agents?

In a nutshell, Agentic AI is a system with AI at its core that can reason, make decisions, and act upon them using tools that are part of this system. AI agents are highly independant and need minimal control and input from humans.

Similar to generative AI, agents can be applied in many different areas of business. However, unlike that of gen AI, their primary function is to independently complete multistep tasks. They are great for process automation and optimization of workflow, especially those that require a lot of manual and tedious work.

AI Agent use cases include:

- Smart Chatbots and Voice Assistants that can complete tasks beyond answering simple FAQs. For example, check the availability of an item in a particular location.

- Predictive Analytics & Anomaly detection: AI Agents can initiate a deeper research from other data bases upon finding anomaly trends in their immediately available data.

- Procurement: agents can analyze the aveaible stock, historical demand, and current market state, to initiate replenishment request.

How to Implement This Trend

- Think about the goal of AI agent implementation, for example, reduce waiting for support response.

- Identify the use cases for AI agent implementation that will have the highest impact on your business or workflows within the product.

- Assess your tech readiness and data readiness for AI Agents.

- Evaluate your talent gap and check for the ways to close it, for example with team augmentation.

- Assess feasibility of AI agents and set clear KPIs.

Industry-specific machine learning trends

From custom treatment plans in healthcare to inventory optimization in retail, ML swiftly penetrates industries.

1. In retail, machine learning enhances personalization and operational efficiency:

- Retailers use ML algorithms to analyze customer data, delivering tailored product recommendations and targeted marketing campaigns that boost customer engagement.

- AI-driven demand forecasting helps maintain optimal inventory levels, reducing costs from overstocking or stockouts.

- AI-powered retail chatbots streamline customer support, providing instant responses and improving shopping experiences.

2. In healthcare, ML helps optimize patient care and operations:

- Google’s DeepMind analyzes electronic health records (EHRs) to forecast health risks and refine treatment plans.

- ML algorithms spot anomalies in X-rays, MRIs, and CT scans, aiding early disease diagnosis, for example, cancer detection.

3. In fintech, ML drives smarter financial solutions:

- PayPal monitors user activities to identify suspicious patterns, minimizing fraud.

- Wealthfront’s robo-advisor customizes investment strategies based on client goals.

4. In logistics & transportation, ML simplifies route planning and vehicle performance:

- UPS reduces delivery times and costs with ML-driven route planning.

- Amazon employs ML to forecast inventory needs and ensure order fulfillment.

5. In travel & hospitality, ML transforms guest experiences:

- Airbnb and similar platforms offer housing tailored to user preferences.

- Hilton Hotel adjusts room rates with ML to match demand trends.

6. In manufacturing & supply chain, ML drives productivity while reducing costs:

- Tools like those from General Electric spot equipment issues early, preventing manufacturing line withholds.

- ML can also help ensure optimal stock levels for improved supply chain efficiency.

7. In media & entertainment, ML enhances content delivery:

- Netflix and Spotify offer content based on individual preferences.

- ML-powered analysis enriches ad strategies and user engagement.

8. In energy, oil & gas, ML elevates resource efficiency:

- Chevron uses ML to detect pipeline issues, minimizing downtime and disruptions.

- NextEra Energy predicts renewable energy outputs to refine resource management.

Future of machine learning in 2026 and beyond

According to Fortune Business Insights, the ML market is poised to grow from $26 billion in 2023 to over $225 billion by 2030. Summing up the trends we listed above and looking ahead, we expect the ML market to

- Deliver advanced conversational agents to assist and engage in dialogues with customers

- Automate more manufacturing and logistics processes for effective supply chains globally

- Provide a more structured approach to ethics, adopting ethical guidelines for decision-making algorithms

- Contribute to sustainable efforts in energy consumption, improve agriculture with precision farming, assist in disaster response via predictive modeling, etc.

- Leap toward human-machine collaboration with co-piloting models, where ML complements human decision-making.

Because industries are in a rush to adopt ML, an issue looming on the horizon is the acute shortage of skilled data scientists and engineers. According to a report by the World Economic Forum, the demand for experts in the field will outstrip supply by 85 million jobs by 2030. The shortage could slow down potential growth if not addressed. What companies can do is educate and train their staff internally or resort to outsourced agencies for expert ML consulting or full-fledged ML development.

Leverage MobDev’s ML expertise to build your AI product

MobiDev’s engineers have been providing clients with high-quality software solutions since 2009 and supporting them with specialized AI solutions since 2018. We can help you integrate pre-trained models into your systems or create custom solutions tailored to your specific needs. You can also benefit from our:

- In-house AI labs involved in the research and development of complex AI solutions

- ML consulting and engineering services designed to meet your specific needs

- AI agent development services to bring your business automation to the next level

- Transparency and communication throughout the whole process

Our experience in building efficient architectures for AI products of different complexities allows us to solve any challenge. Get in touch with our team today to get a detailed estimate and launch your AI product. It’s time to bring your vision to life!