There is no manual for decision making. However, important decisions are usually made by analyzing large amounts of data to find the optimal way to solve a problem. That’s where we truly rely on logic and deduction. That’s why surgeons dig into a patient’s anamnesis, and why businesses gather key people to see the bigger picture before changing course.

Relying on AI decision making can significantly reduce the time spent on research and data gathering. But, as the final decision is up to the human, we still need to understand how our support system came up with these insights. So in this article we’ll discuss AI explainability and why it’s important for such areas as healthcare, jurisprudence, or finance to justify any piece of information.

What is explainability in AI?

AI decision-support systems are used in a range of industries that base their decisions on information. This is what’s called a data-driven approach – when we try to analyze available records to extract insights, and support decision makers.

The key value here is data processing, something a person can’t do quickly. Neural networks, on the other hand, can process enormous amounts of data to find correlations and patterns, or simply search for a required item within an array of information. This can be applied to domain data like stock market reports, insurance claims, medical analysis, or radiology imagery.

The problem is that, while AI algorithms can derive logical statements from data, it remains unclear what these determinations are based on. For instance, some computer vision systems for lung cancer detection show up to 94% accuracy in their predictions. But can a pulmonologist rely on a model’s prediction alone, without knowing whether it has mistaken fluid for a tumor?

This concept can be represented as a black box, as the decision process inside the algorithm is hidden and often can’t be understood even by its designer.

Black box concept illustrated

AI explainability refers to techniques by which a model can interpret its findings in a way humans can understand. In other words, it’s a part of AI functionality that is responsible for explaining how the AI came up with a specific output. To interpret the logic of AI decision processes, we need to compare three factors:

- Data input

- Patterns found by the model

- Model prediction

These components are the essence of AI explainability as implemented in decision-making systems. The explanation, in this case, can be defined as how exactly the system provides insights to the user. Depending on the implementation method, this can be presented as details on how the model arrived at its output, a decision tree path, or a data visualization.
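To make the idea of a decision tree path more concrete, here is a minimal sketch that links the three factors: the data input, the rules that fired, and the prediction. The tree is written by hand, and the feature names (`income`, `debt_ratio`), thresholds, and labels are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """A node of a tiny hand-written decision tree (hypothetical example)."""
    feature: Optional[str] = None      # feature tested at this node
    threshold: float = 0.0             # go left if value <= threshold
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    label: Optional[str] = None        # set only on leaf nodes


def predict_with_path(node, sample):
    """Return (prediction, path), where path lists every test that was applied."""
    path = []
    while node.label is None:
        value = sample[node.feature]
        if value <= node.threshold:
            path.append(f"{node.feature} = {value} <= {node.threshold}")
            node = node.left
        else:
            path.append(f"{node.feature} = {value} > {node.threshold}")
            node = node.right
    return node.label, path


# Hypothetical tree: feature names and thresholds are illustrative only.
tree = Node(
    feature="debt_ratio", threshold=0.4,
    left=Node(feature="income", threshold=30000,
              left=Node(label="review"),
              right=Node(label="approve")),
    right=Node(label="decline"),
)

sample = {"income": 52000, "debt_ratio": 0.25}       # data input
prediction, path = predict_with_path(tree, sample)   # rules that fired + prediction
print(prediction)
for step in path:
    print(" ", step)
```

The returned path is the explanation: a decision maker can read each comparison and check whether it makes sense for the case at hand.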

AI decision-support system types

Decision-support systems can be categorized by purposes and audiences. But we can split them into two large groups: consumer and production-oriented.

Consumer-oriented AI workflow

The first group, consumer-oriented systems, covers AI decision-making systems that are generally used for everyday tasks. Tools like recommendation engines don’t really require explainability. We don’t question the logic behind music recommendations made by neural networks. Unless the system suggests complete garbage, it’s unnecessary to know how it picked that song and brought it to you. You’ll probably just listen to it.

Production-oriented AI workflow

Production-oriented systems, in contrast, usually deal with critical decisions that require justification, because the person in charge of the final decision is responsible for any possible financial loss or harm. In this case, the AI model acts as an assistant to a professional, providing explicit information to the decision maker.

So now, let’s look at the examples of explainability in production-oriented AI systems and how it can support decision makers in healthcare, finance, social monitoring, and other industries.

Explainable AI for medical image processing and cancer screening

Computer vision techniques are actively used in the processing of medical images, such as Computed Tomography (CT), Magnetic Resonance Imaging (MRI), and Whole-Slide Images (WSI). Typically, these are 2D images used for diagnostics, surgical planning, or research tasks. However, CT and WSI can often depict the 3D structure of the human body and reach enormous sizes.

For example, a WSI of human tissue can reach 21,500 × 21,500 pixels or more. Efficient, fast, and accurate processing of such images serves as the basis for early detection of various cancer types, such as:

- Renal Cell Carcinoma

- Non-small cell lung cancer

- Breast cancer lymph node metastasis

The doctor’s task in this case is to visually analyze the image and find suspicious patterns in the cellular structure. Based on the analysis, the doctor will provide a diagnosis or decide on surgical intervention.

However, since a WSI is a very large image, it takes a great deal of time, attention, and expertise to analyze. Traditional computer vision methods would also require too many computational resources for end-to-end processing. So how can explainable AI help here?

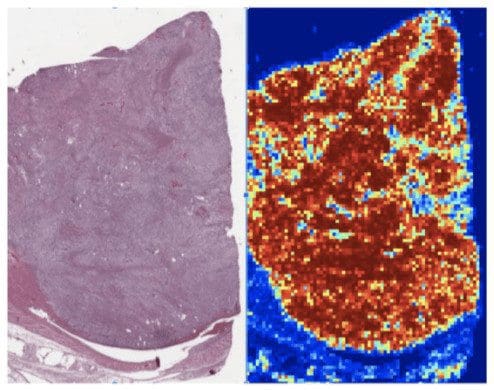

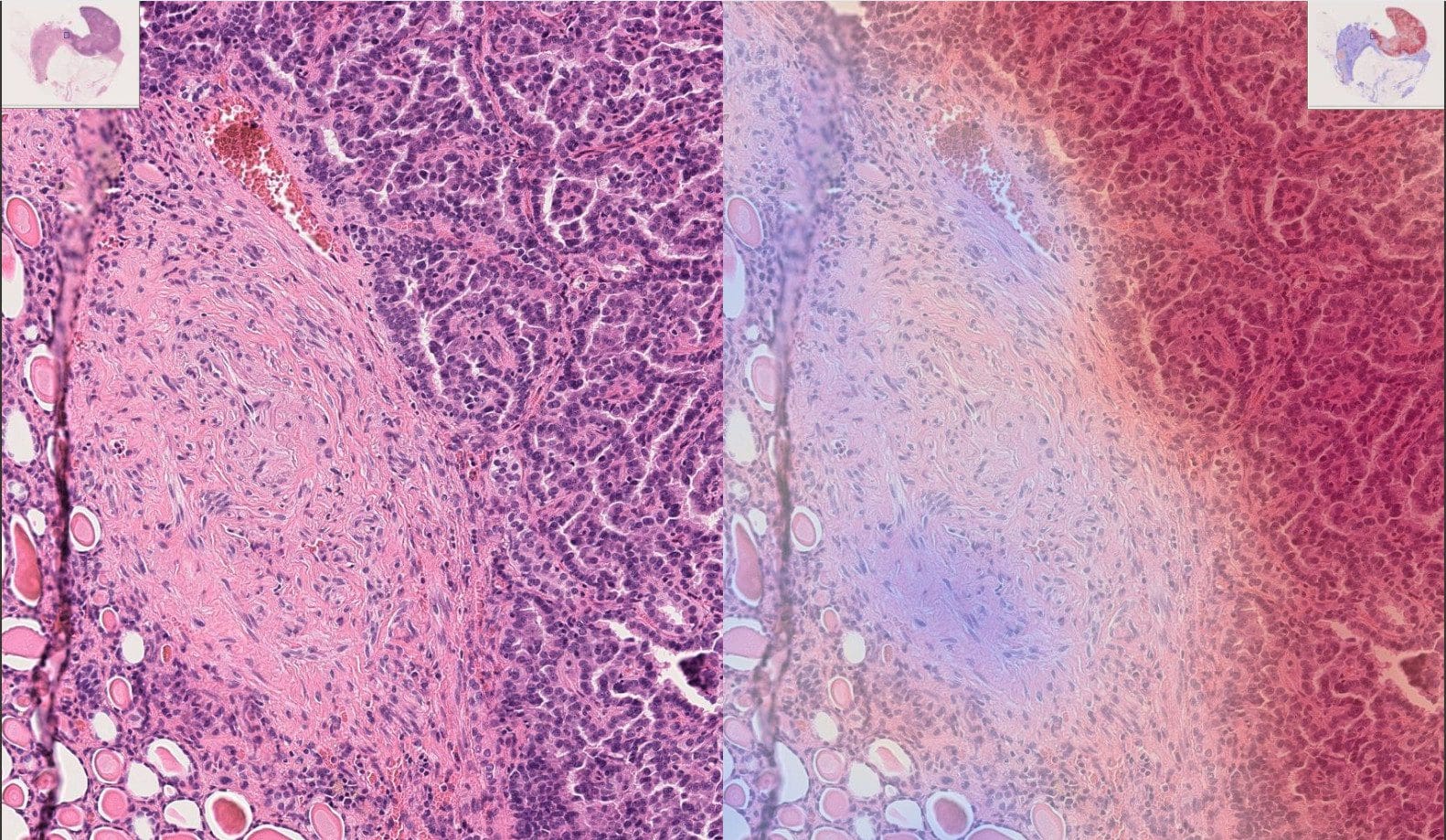

In terms of WSI analysis, explainable AI acts as a support system that scans image sectors and highlights regions of interest with suspicious cellular structures. The machine won’t make any decision, but it will speed up the process and make the doctor’s work easier. This is possible because the machine scans images more accurately and quickly than a human, leaving less chance of missing specific regions. Here is an example of renal clear cell carcinoma on a low-resolution WSI.

Kidney renal clear cell carcinoma is predicted to be high risk (left), with the AI-predicted “risk heatmap” on the right; red patches correspond to “high-risk” and blue patches to “low-risk”.

As you can see in the image on the right, the AI support system highlights the high-risk regions where cancer cells are more likely to be. This eliminates the need to manually examine the whole image of a kidney and shows where medical expertise and attention are needed most. Similar heatmaps are used for closer, higher-resolution analysis of kidney tissue.
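The patch-based heatmap idea can be sketched roughly as follows. This is not the system behind the images in this article: the `mean_intensity` function is only a placeholder for a trained model, and the 4×4 "slide" is a toy input invented for the example.

```python
def tile_scores(image, patch, score_fn):
    """Split a 2D image (list of rows) into patch x patch tiles and score each tile."""
    rows, cols = len(image), len(image[0])
    heatmap = []
    for top in range(0, rows, patch):
        heat_row = []
        for left in range(0, cols, patch):
            tile = [row[left:left + patch] for row in image[top:top + patch]]
            heat_row.append(score_fn(tile))
        heatmap.append(heat_row)
    return heatmap


def mean_intensity(tile):
    """Placeholder for a trained model: here the 'risk' is just the mean pixel value."""
    pixels = [p for row in tile for p in row]
    return sum(pixels) / len(pixels)


def top_regions(heatmap, k):
    """Return the k highest-scoring tiles as (score, tile_row, tile_col)."""
    scored = [(s, r, c) for r, row in enumerate(heatmap) for c, s in enumerate(row)]
    return sorted(scored, reverse=True)[:k]


# A toy 4x4 "slide" with one bright 2x2 corner standing in for suspicious tissue.
image = [
    [0.1, 0.1, 0.2, 0.2],
    [0.1, 0.1, 0.2, 0.2],
    [0.1, 0.1, 0.9, 0.8],
    [0.1, 0.1, 0.7, 0.8],
]
heatmap = tile_scores(image, patch=2, score_fn=mean_intensity)
print(top_regions(heatmap, k=1))
```

Scoring tiles independently keeps memory use bounded for very large images, and the resulting grid can be rendered as a red-to-blue overlay so that the doctor sees where to look first.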

Example of WSI kidney tissue in high resolution (left), with the AI-predicted attention heatmap on the right; blue indicates low cancer risk zones, red indicates high-risk zones.

The advantage of such systems is not only in operational speed and greater accuracy of analysis. The image can also be manipulated in different ways to provide a fuller picture for diagnostics and to make more accurate surgical decisions if needed. You can check the live demo of kidney tissue WSI analysis to see how the interface looks and how exactly it works.

Explainable AI for text processing

Text processing is a standalone task as well as a part of bigger workflows in industries such as jurisprudence, healthcare, finance, media, and marketing. There are plenty of deep learning techniques capable of analyzing text from different angles, including natural language processing (NLP), natural language understanding (NLU), and natural language generation (NLG). These algorithms can classify text by its tone and emotional shades. They can also detect spam, produce a short summary, or generate a long contextual text from a small initial piece.

Besides use in intelligent document processing, machine translation, and communication technologies, there are other applications based on explainable AI.

Self harm or suicide prediction

One application of text classification comes from Meta. Facebook employees have developed an algorithm that estimates users’ suicidal moods based on an analysis of the text of their posts, as well as the responses to these posts from relatives and friends.

If the system signals a high probability that a person may harm themselves, this information is submitted to the community operations team for manual processing and verification. Explainable AI supports these teams by constantly scanning social media feeds for risky comments. The human agent can then use the explanations presented by the model, which point to how the machine concluded that a particular person is at risk of suicide.

Financial news mining

Another interesting case is the use of financial news texts in conjunction with fundamental analysis to forecast stock values. A prime example is “trading on Elon Musk’s tweets”: his tweets had a dramatic effect on the value of several cryptocurrencies (Dogecoin and Bitcoin), as well as on the value of his own company. The point is that if someone can impact prices so much through social media, it is worth knowing about these posts in advance.

AI algorithms allow you to process a huge number of news headlines and texts in the shortest possible time by:

- analyzing the tonality of the text

- identifying the stock or company referred to in the article

- identifying the name and position of the specialist quoted by the publication

All this allows you to quickly make decisions on a purchase or sale, based on the summary of social media information. In other words, such AI applications can support individuals and businesses in their decision by supplying information about recent events that could impact stock prices and different fluctuations in the market.

The explainability part is responsible for justifying the data by recognizing company, event, persona, or resource entities with named entity recognition technology. This allows the decision-support system user to understand the logic behind the model’s prediction.
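As a rough illustration of how recognized entities can serve as evidence for a prediction, the sketch below attaches entity spans to a tone label. It does not use a real named entity recognition model: the `GAZETTEER` dictionary, the company name "Acme Corp", and the headline are all made up for the example, and the tone label is assumed to come from a separate classifier.

```python
import re

# Hypothetical gazetteer standing in for a trained NER model.
GAZETTEER = {
    "Elon Musk": "PERSON",
    "Dogecoin": "RESOURCE",
    "Bitcoin": "RESOURCE",
    "Acme Corp": "COMPANY",   # made-up company name
}


def find_entities(text):
    """Return (start, end, surface_text, entity_type) tuples sorted by position."""
    found = []
    for name, kind in GAZETTEER.items():
        for match in re.finditer(re.escape(name), text):
            found.append((match.start(), match.end(), name, kind))
    return sorted(found)


def explain(text, predicted_tone):
    """Bundle a prediction with the entities that show what the text is about."""
    return {
        "text": text,
        "tone": predicted_tone,
        "evidence": [
            {"span": (start, end), "text": name, "type": kind}
            for start, end, name, kind in find_entities(text)
        ],
    }


# Made-up headline; the tone label would come from a separate classifier.
report = explain("Elon Musk tweets about Dogecoin again", predicted_tone="positive")
for item in report["evidence"]:
    print(item["type"], item["text"], item["span"])
```

Keeping character spans alongside each entity lets a user interface highlight the exact words that tie the prediction to a person, company, or resource.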

What do we need to make the work of AI algorithms with text understandable? We need to uncover the patterns the AI “saw” in the text. We want to see which words, and which connections between words, the algorithm relied on to reach its conclusion about the tonality of the text.

How AI explainability works in text processing

Consider explainable AI using the example of financial news headlines. Below are several headlines. You are invited to assess the tonality of each one (positive, neutral, or negative) and to reflect on which words you relied on when you assigned it to a particular group:

- Brazil’s Real and Stocks Are World’s Best as Foreigners Pile In

- Analysis: U.S. Treasury market pain amplifies worry about liquidity

- Policymaker Warns of Prolonged Inflation due to Political Crisis

- A 10-Point Plan to Reduce the European Union’s Reliance on Russian Natural Gas

- Company Continues Diversification with Renewables Market Software Firm Partnership

Now let’s compare your expectations with how the AI classifies these headlines and see which words were important for the algorithm’s decision.

In the pictures below, the words the AI algorithm relies on in making a decision are highlighted. The redder the highlight, the more important the word was for the decision. The bluer the highlight, the more the word tilted the algorithm toward the opposite decision.

1. Brazil’s Real and Stocks Are World’s Best as Foreigners Pile In

Class: Positive

2. Analysis: U.S. Treasury market pain amplifies worry about liquidity

Class: Negative

3. Policymaker Warns of Prolonged Inflation due to Political Crisis

Class: Negative

4. A 10-Point Plan to Reduce the European Union’s Reliance on Russian Natural Gas

Class: Neutral

5. Company Continues Diversification with Renewables Market Software Firm Partnership

Class: Positive

How long did it take you to read all these headlines, analyze and make a decision? Now imagine that AI algorithms can do the same, but hundreds of times faster!
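One simple way to obtain per-word importance of this kind is leave-one-word-out (occlusion): remove each word in turn and measure how much the model’s score changes. The sketch below assumes a toy classifier built from a hypothetical `LEXICON` of word weights; it is not the model used for the headlines above, and the weights are invented for illustration.

```python
import math

# Hypothetical word weights standing in for a trained sentiment model.
LEXICON = {"best": 2.0, "pile": 0.5, "pain": -1.5, "worry": -1.2,
           "warns": -1.5, "crisis": -1.8}


def tokenize(text):
    """Lowercase and strip surrounding punctuation from each whitespace-separated token."""
    tokens = (word.strip(".,:;!?\"'").lower() for word in text.split())
    return [t for t in tokens if t]


def positive_probability(tokens):
    """Toy classifier: a sigmoid over the summed word weights."""
    total = sum(LEXICON.get(token, 0.0) for token in tokens)
    return 1.0 / (1.0 + math.exp(-total))


def word_importance(text):
    """Leave-one-word-out: how much does the score change when a word is removed?"""
    tokens = tokenize(text)
    baseline = positive_probability(tokens)
    importance = []
    for i, token in enumerate(tokens):
        without = tokens[:i] + tokens[i + 1:]
        importance.append((token, baseline - positive_probability(without)))
    return baseline, importance


headline = "Analysis: U.S. Treasury market pain amplifies worry about liquidity"
baseline, importance = word_importance(headline)
print(f"P(positive) = {baseline:.2f}")
for token, delta in sorted(importance, key=lambda pair: pair[1]):
    if delta != 0:
        print(f"{token:>10}  {delta:+.3f}")
```

A negative change means the word pushed the score toward the negative class, and a positive change means it pushed toward the positive class. These signed values are what a red-and-blue highlight would visualize.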

How to integrate Explainable AI features into your project?

One might think that integration of explainable AI into a project is quite a sophisticated and challenging step. Let us demystify it.

In general, from the perspective of explainability, AI systems may be classified into two classes:

- Those which support explainability out-of-the-box

- Those which need some third-party libraries to be applied to make them explainable

The first class includes instance and semantic segmentation algorithms. Attention-based AI algorithms are good examples of self-explainable algorithms, as seen in the WSI kidney tissue cancer risk images above.

The second class of AI algorithms generally doesn’t support self-explainability out of the box. But this doesn’t make them completely unexplainable. The text classification shown above is an example of such an algorithm. We can apply third-party libraries on top of these algorithms to make them explainable; libraries such as LIME or SHAP are usually easy to use for this purpose. However, explainability requirements may differ depending on the project or user objectives, so we suggest you enlist the support of an AI expert team to receive proper consulting and development.
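To give a feel for what a library like SHAP estimates, the sketch below computes exact Shapley values for a tiny model in plain Python: each word’s contribution is its marginal effect on the score, averaged over every order in which words could be revealed. This illustrates the underlying idea only and is not the SHAP library API; the `WEIGHTS`, the `INTERACTION` term, and the scoring function are hypothetical.

```python
from itertools import permutations

# Hypothetical scoring model: word weights plus one interaction term.
WEIGHTS = {"warns": -1.0, "inflation": -0.5, "crisis": -1.0}
INTERACTION = ({"inflation", "crisis"}, -0.5)   # extra penalty when both appear


def model_score(present):
    """Score of the toy model when only the words in `present` are visible."""
    score = sum(WEIGHTS[word] for word in present)
    pair, bonus = INTERACTION
    if pair <= set(present):
        score += bonus
    return score


def shapley_values(features, value_fn):
    """Exact Shapley values: average marginal contribution over all orderings."""
    totals = {f: 0.0 for f in features}
    orderings = list(permutations(features))
    for order in orderings:
        present = []
        previous = value_fn(present)
        for feature in order:
            present.append(feature)
            current = value_fn(present)
            totals[feature] += current - previous
            previous = current
    return {f: total / len(orderings) for f, total in totals.items()}


values = shapley_values(list(WEIGHTS), model_score)
for word, contribution in values.items():
    print(f"{word:>10}  {contribution:+.2f}")
```

The contributions add up to the difference between the full score and the empty-input score, and the interaction penalty is split evenly between the two words involved. The number of orderings grows factorially with the number of features, which is why practical libraries rely on sampling or model-specific shortcuts instead of this brute-force loop.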

AI explainability enhances trust

One of the key factors preventing mass adoption of AI is the lack of trust. Decision-support tools in data-driven organizations already perform routine tasks of reporting and analysis. But at the end of the day, these systems are still not capable of providing a justified opinion on business-critical or even life-critical decisions.

Explainable AI is a step further in data-driven decision making, as it breaks the black box concept and makes the AI process more transparent, more verifiable and trusted. Depending on the implementation, a support system can provide more than just a hint, because as we track down the decision process of an AI algorithm, we can uncover new ways to solve our task, or be provided with optional types of decisions.