
For retailers, navigating the complexities of eCommerce can be daunting, especially when faced with high return rates due to issues like incorrect fit, color discrepancies, and unmet product expectations. According to the National Retail Federation (NRF) and Happy Returns, the average eCommerce return rate was 16.9% in 2024, with clothing being a top category.

Virtual try-on technology presents a promising solution to reduce these return rates, yet it demands a level of technical expertise and customization that off-the-shelf products often fail to provide.

This article is designed to help retailers outline the essential components and considerations for building tailored virtual try-on solutions. In this guide, we will explain how virtual try-on technology works, examine the different types of solutions, and share insights on what it takes to successfully develop and integrate your own virtual try-on app.

What is Virtual Try-On Technology and How Does it Work?

Virtual try-on technology employs computer vision, machine learning, and augmented reality technology to enable customers to visualize how products like clothing, eyewear, or makeup will look on them without needing to physically try them on. This innovation helps reduce the uncertainty and dissatisfaction often associated with online shopping by providing a realistic preview of a product’s fit and appearance. Consequently, it enhances the shopping experience, increases customer confidence, and can significantly reduce return rates.

Recent advances in AI and AR technologies have made virtual try-on a popular e-commerce feature, and solutions generally fall into two categories: real-time and non-real-time.

Some virtual try-on apps offer real-time interaction, allowing users to observe products on themselves using a live video feed from their camera. These systems leverage computer vision algorithms to continuously track user movements, providing an engaging and immersive experience.

Conversely, non-real-time virtual try-on solutions enable users to upload a photo or video of themselves, viewing the product in a static image or video format. This method is ideal for scenarios where real-time interaction isn’t necessary, such as on e-commerce product pages or in-store kiosks.

Both real-time and non-real-time solutions offer unique advantages, and the choice between them depends on the specific application and use case. For example, non-real-time solutions typically provide better precision, because without a live-video latency budget they can run heavier, more accurate models on each image.

Types of Virtual Try-On Software Solutions

As retailers look to make online shopping more personal and engaging, virtual try-on technology has expanded beyond clothes and makeup to cover many other product types.

Let’s consider the specifics of building different types of virtual try-on products.

Virtual Try-On for Watches

When it comes to virtual try-on for watches, ARKit and ARCore have some limitations. ARKit offers full-body tracking; however, it does not support wrist tracking separately, while ARCore lacks full-body tracking altogether. This means that to accurately track and display watches, additional AI expertise is often needed. Ready-to-use hand recognition models can be helpful for this purpose.
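One way to use a ready-made hand landmark model for watch placement is to derive a local coordinate frame at the wrist from three landmarks. The sketch below assumes a 21-point hand model that returns 3D landmarks, including the wrist, the index finger knuckle, and the pinky knuckle (MediaPipe Hands, for example, numbers these 0, 5, and 17). The function and axis names are hypothetical, and in practice the result would still need smoothing across frames.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / n, v[1] / n, v[2] / n)


def wrist_frame(wrist: Vec3, index_mcp: Vec3, pinky_mcp: Vec3):
    """Return (origin, x_axis, y_axis, z_axis) of an orthonormal frame at the wrist.

    x_axis: across the hand, from the pinky knuckle toward the index knuckle.
    y_axis: along the hand, from the wrist toward the knuckles.
    z_axis: perpendicular to the back of the hand (its sign differs between
            left and right hands, so a real app would correct it per hand).
    """
    x_axis = normalize(sub(index_mcp, pinky_mcp))
    knuckle_mid = tuple((i + p) / 2 for i, p in zip(index_mcp, pinky_mcp))
    forward = normalize(sub(knuckle_mid, wrist))
    z_axis = normalize(cross(x_axis, forward))
    # Re-derive y so the axes are exactly orthogonal even when noisy landmarks
    # make `forward` not quite perpendicular to x_axis.
    y_axis = cross(z_axis, x_axis)
    return wrist, x_axis, y_axis, z_axis
```

The three axes can serve as the columns of the watch model’s rotation matrix, with the wrist landmark (possibly shifted slightly toward the forearm) as its position.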

Using AR markers (symbols and patterns printed on a band worn on the wrist) is an effective approach to implementing virtual try-on for watches. A computer vision algorithm detects these markers to determine the wrist’s position and orientation and render a 3D watch correctly. Although this approach has limitations, such as the need to print the markers, it can still produce high-quality virtual try-on experiences if it fits the business use case. The key challenge then is to create accurate 3D models of the watches.

Virtual Try-On for Shoes

For shoe try-on, we need foot pose estimation models. Because foot pose estimation is less common than hand pose estimation, this may require creating and training custom models. In essence, virtual try-on for shoes uses deep learning to estimate the foot’s position in 3D space. This is similar to full-body 3D pose estimation models, which find key points in 2D and then convert them to 3D coordinates. Once the foot’s 3D key points are detected, they can be used to build a 3D model of the foot and to adjust the size and position of the shoe model so that it fits properly.

Positioning of a 3D model of footwear on top of a detected parametric foot model (source)
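When a depth value is available for each detected 2D key point (for example, from a depth sensor or from a network that also predicts depth, which is an assumption here), lifting the point to 3D reduces to inverting the pinhole camera model. The sketch below uses hypothetical camera intrinsics and then derives a uniform scale factor for a shoe model from the heel-to-toe distance; a production system would fit a full parametric foot model rather than rely on two points.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Intrinsics:
    fx: float  # focal length in pixels (x)
    fy: float  # focal length in pixels (y)
    cx: float  # principal point x in pixels
    cy: float  # principal point y in pixels


def unproject(u: float, v: float, depth_m: float, k: Intrinsics) -> Vec3:
    """Back-project pixel (u, v) at the given depth into camera coordinates (meters)."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def shoe_scale(heel: Vec3, toe: Vec3, shoe_model_length_m: float) -> float:
    """Uniform scale that makes the shoe model as long as the measured foot."""
    foot_length_m = math.dist(heel, toe)
    return foot_length_m / shoe_model_length_m
```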

Compared with full-body and face pose estimation, foot pose estimation faces specific challenges, primarily due to the lack of 3D annotation data needed for effective model training. One effective solution is synthetic data: rendering photorealistic 3D models of human feet with known key points and training the model on these renders. You can also use photogrammetry, which reconstructs a 3D scene from multiple 2D views, to reduce the amount of manual labeling needed.
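Synthetic data sidesteps the annotation problem because the renderer already knows where every 3D key point is, so 2D labels come for free by projecting those points through the virtual camera. Here is a minimal sketch of that labeling step, assuming the key points are already expressed in the virtual camera’s frame (z pointing forward, in meters) and a pinhole camera with hypothetical intrinsics. It only checks whether a point is in front of the camera and inside the image; detecting self-occlusion by the foot would require the renderer’s depth buffer.

```python
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Pixel = Tuple[float, float]


def project(p: Vec3, fx: float, fy: float, cx: float, cy: float) -> Optional[Pixel]:
    """Pinhole projection; returns None for points behind the camera."""
    x, y, z = p
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def make_labels(keypoints: List[Vec3], fx: float, fy: float, cx: float, cy: float,
                width: int, height: int) -> List[Tuple[Optional[Pixel], bool]]:
    """Return one (pixel, visible) label per 3D key point of a rendered frame."""
    labels = []
    for p in keypoints:
        uv = project(p, fx, fy, cx, cy)
        visible = uv is not None and 0 <= uv[0] < width and 0 <= uv[1] < height
        labels.append((uv, visible))
    return labels
```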

This kind of solution is considerably more complex. To enter the market with a ready-to-use product, you need to collect a sufficiently large foot keypoint dataset (using synthetic data, photogrammetry, or a combination of both), train a custom pose estimation model that combines sufficient accuracy with fast inference, test its robustness in various conditions, and create a foot model. We consider it a medium-complexity project in terms of technology.

Virtual Try-On for Glasses

FittingBox is one example of a startup that uses AR for virtual glasses try-on. In their implementation, the user chooses a glasses model from a catalog and can then see how it looks on their face.

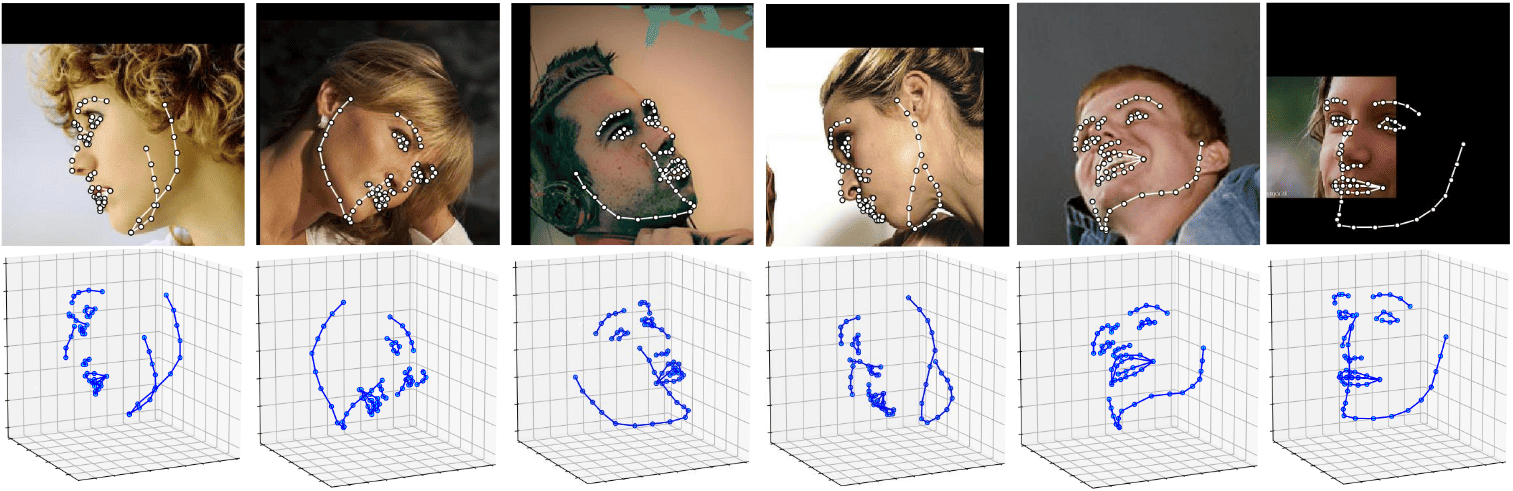

To accomplish this, the app uses a deep learning-powered pose estimation approach based on facial landmark detection, where a common annotation format includes 68 2D or 3D facial landmarks. This annotation format allows the software to distinguish the face contour, nose, eyes, eyebrows, and lips with high accuracy. Training a face landmark estimation model requires annotated data, but open-source libraries like Face Alignment provide pre-trained face landmark estimation out of the box.
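To illustrate how the 68-point format supports glasses placement, here is a minimal sketch that derives an anchor point, a scale reference, and the head roll from 2D landmarks. It assumes the widely used iBUG 300-W indexing (0-based), in which points 36 to 41 outline one eye and points 42 to 47 the other; the function name is hypothetical, and a 3D pipeline could use the 3D landmarks in the same way.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

EYE_A = range(36, 42)  # one eye contour (the subject's right eye in iBUG 300-W)
EYE_B = range(42, 48)  # the other eye contour (the subject's left eye)


def centroid(points: List[Point]) -> Point:
    xs, ys = zip(*points)
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def glasses_anchor(landmarks: List[Point]) -> Tuple[Point, float, float]:
    """Return (bridge point, eye-center distance in pixels, roll in degrees)."""
    if len(landmarks) != 68:
        raise ValueError("expected 68 landmarks")
    a = centroid([landmarks[i] for i in EYE_A])
    b = centroid([landmarks[i] for i in EYE_B])
    bridge = ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2)
    eye_distance = math.dist(a, b)
    roll = math.degrees(math.atan2(b[1] - a[1], b[0] - a[0]))
    return bridge, eye_distance, roll
```

The frame model would then be centered on the bridge point, scaled by the ratio of `eye_distance` to the distance between its own lens centers, and rotated by `roll`.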

Virtual glasses try-on is relatively straightforward, especially with pre-trained models available for the face-tracking part of the application. For the most basic features, you can use existing mobile SDKs, but anything more sophisticated requires AI expertise. Keep in mind that low-quality cameras and poor lighting will reduce the accuracy and quality of the experience.

Later in the article, we describe MobiDev’s experience with one such project (see the Success Story section).

Virtual Try-On for Hats

Hats are another item frequently simulated in AR. For a hat to render correctly on a person’s head, the 3D coordinates of key points such as the temples and the center of the forehead are needed. Basic solutions for this can often be built with AR frameworks.
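A minimal sketch of how those three key points could drive hat placement, assuming they are already available as 3D coordinates in meters (for example, selected from a face mesh, which is an assumption here). The temple distance sets the scale, the midpoint between the temples serves as the pivot, and the direction toward the forehead tells the renderer which way the hat should face. The `hat_inner_width_m` parameter is a hypothetical property of the 3D hat model.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def hat_placement(left_temple: Vec3, right_temple: Vec3, forehead: Vec3,
                  hat_inner_width_m: float) -> Tuple[float, Vec3, Vec3]:
    """Return (uniform scale, pivot position, unit forward direction) for a hat model."""
    head_width = math.dist(left_temple, right_temple)
    scale = head_width / hat_inner_width_m
    # Pivot halfway between the temples, roughly the center of the head's cross-section.
    pivot = tuple((l + r) / 2 for l, r in zip(left_temple, right_temple))
    to_forehead = tuple(f - p for f, p in zip(forehead, pivot))
    length = math.sqrt(sum(c * c for c in to_forehead))
    forward = tuple(c / length for c in to_forehead)
    return scale, pivot, forward
```

In a renderer, the hat would typically be placed slightly above the pivot so the brim rests on the forehead.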

Makeup Virtual Try-Ons

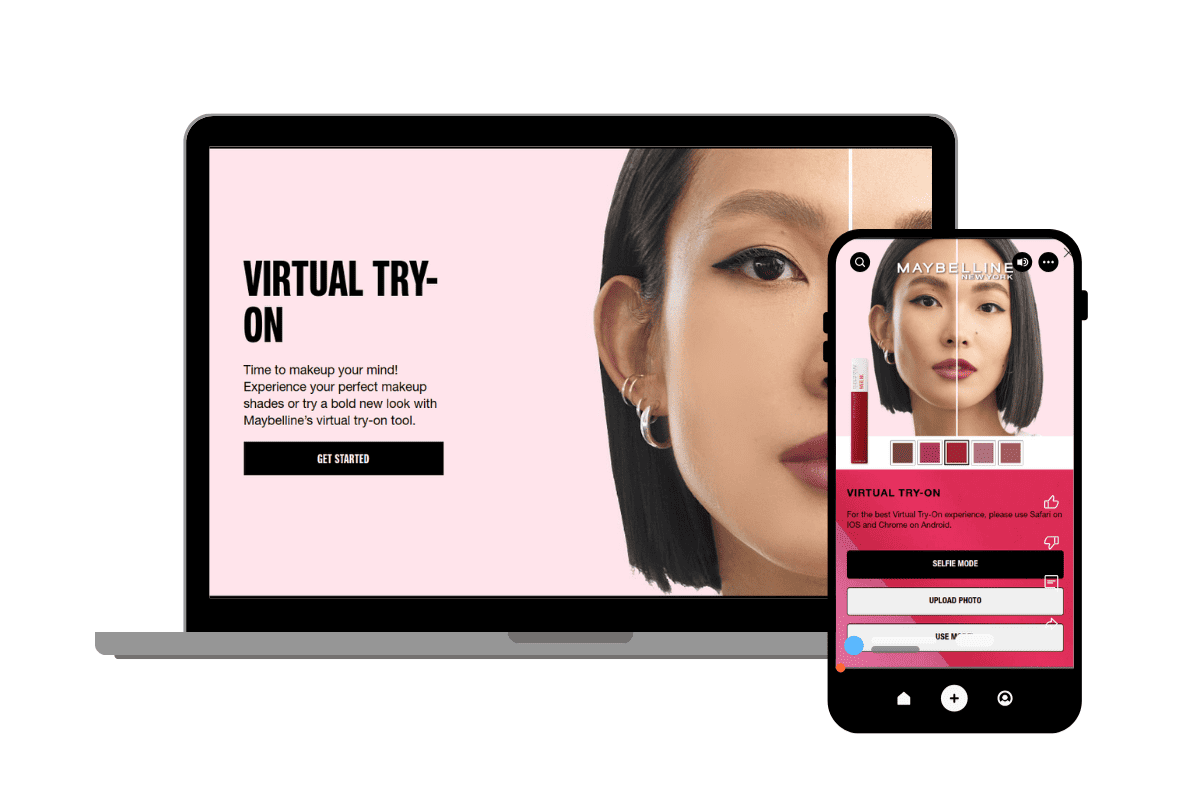

Makeup virtual try-ons have become very popular with beauty lovers and shoppers, changing how makeup products are chosen and bought. Maybelline’s Virtual Makeover gives users various virtual try-on tools, like foundation shade finders and mascara quizzes, making the makeup experience interactive and personalized. Fashionista has reviewed multiple virtual beauty try-on platforms and emphasizes how realistic results help boost user satisfaction and confidence in their makeup choices.

These innovative tools are fun and educational, letting users explore makeup options, try new looks, and make smart buying decisions. Users can test makeup looks in real time using their device’s camera or by uploading photos or videos.

The real-time mode shows the user wearing virtual makeup as they move and interact, greatly enhancing the shopping experience. For instance, L’Oréal’s solution lets customers see how different colors of lipstick, mascara, eyeshadow, and foundation will look on them before they buy. Some virtual try-ons also offer makeup tutorials and beauty tips to help users achieve specific looks or styles.

These solutions often rely on AI and AR frameworks to segment facial zones correctly, using pre-trained models for basic features. For example, ARKit’s ARFaceGeometry provides the detailed topology of a face, which can then be used to apply virtual makeup. With a mesh of the face (like in the GIF below), aligning cosmetics properly becomes straightforward, resulting in a smooth and realistic virtual makeup experience.
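Once the lip region is known (for example, by projecting the lip vertices of the face mesh into the camera image, which is assumed here rather than shown), applying a lipstick shade can be as simple as alpha-blending a color over the pixels inside that region. The pure-Python sketch below uses an even-odd ray-casting point-in-polygon test on a tiny image stored as rows of RGB tuples. A real implementation would run on the GPU, use a soft-edged mask that excludes the inner mouth, and use blend modes that preserve skin texture.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
Image = List[List[RGB]]


def point_in_polygon(x: float, y: float, polygon: List[Tuple[float, float]]) -> bool:
    """Even-odd ray casting: count edge crossings of a ray going right from (x, y)."""
    inside = False
    j = len(polygon) - 1
    for i in range(len(polygon)):
        xi, yi = polygon[i]
        xj, yj = polygon[j]
        if (yi > y) != (yj > y):
            x_cross = xi + (y - yi) * (xj - xi) / (yj - yi)
            if x < x_cross:
                inside = not inside
        j = i
    return inside


def apply_lipstick(image: Image, lip_polygon: List[Tuple[float, float]],
                   color: RGB, opacity: float = 0.5) -> Image:
    """Blend `color` into every pixel whose center lies inside `lip_polygon`."""
    out = [row[:] for row in image]  # copy rows so the input image is untouched
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            if point_in_polygon(x + 0.5, y + 0.5, lip_polygon):
                out[y][x] = tuple(round((1 - opacity) * c + opacity * t)
                                  for c, t in zip(pixel, color))
    return out
```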

Virtual Try-On for Clothes

Virtual fitting room technology lets customers use a camera-equipped device and a dedicated app to explore products in detail. Products can be overlaid onto the customer’s own image to show how well they fit, and many solutions include tools that measure the customer’s body and suggest the best size. This interactive experience helps customers make informed buying decisions.
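Size suggestion can be sketched as matching the customer’s measurements against a size chart. In the minimal example below, the measurements are assumed to come from the app’s body-measurement step (not shown), and the chart values, size labels, and function names are hypothetical; real charts are brand-specific and often account for fit preferences as well.

```python
from typing import Dict, Tuple

Range = Tuple[float, float]

# Hypothetical size chart: size -> (chest range in cm, waist range in cm).
SIZE_CHART: Dict[str, Tuple[Range, Range]] = {
    "S": ((86, 94), (71, 79)),
    "M": ((94, 102), (79, 87)),
    "L": ((102, 110), (87, 95)),
}


def distance_to_range(value: float, rng: Range) -> float:
    """0 if value lies inside the range, otherwise how far outside it is."""
    low, high = rng
    if value < low:
        return low - value
    if value > high:
        return value - high
    return 0.0


def recommend_size(chest_cm: float, waist_cm: float,
                   chart: Dict[str, Tuple[Range, Range]] = SIZE_CHART) -> str:
    """Pick the size whose ranges are closest to the measurements.

    Ties (e.g. a value exactly on a shared boundary) go to the size listed first.
    """
    best_size, best_score = None, float("inf")
    for size, (chest_range, waist_range) in chart.items():
        score = (distance_to_range(chest_cm, chest_range)
                 + distance_to_range(waist_cm, waist_range))
        if score < best_score:
            best_size, best_score = size, score
    return best_size
```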

Building 3D virtual try-on for clothing is harder than for shoes, hats, glasses, and watches. Because clothes deform to fit a person’s body, the system needs a deep learning model that recognizes not only key points at the body’s joints but also the body’s 3D shape. Existing pose estimation and body segmentation models can meet many business needs.

However, these key points are sometimes insufficient for specific tasks, such as underwear try-on, which requires custom models. The type of virtual try-on solution matters too. A “magic mirror” in a store may be easier to manage because it has a fixed camera at a set angle. With smartphones and tablets, the task is harder because the camera position keeps changing, which makes pose estimation more challenging.

Virtual Try-On for Pets

A virtual try-on for pets lets pet owners see how different products like clothes, accessories, or grooming styles look on their pets by using pictures or videos. This technology uses augmented reality or computer vision to place these items on the pet’s image, showing the owner what the product will look like on their furry friend.

In 2021, Chewy, an online pet supply store, introduced the “Fur-tual Boutique” virtual try-on feature, which let dogs and cats virtually try on seven popular Halloween costumes from Frisco, such as Cowboy, Killer Doll, Granny, and Superhero. This type of application uses technologies like image recognition, object tracking, and AR to display products correctly in pictures or videos of pets. However, pre-trained models for pets are far less readily available than those for humans, so a custom model may have to be created depending on the type of animal.

Virtual Try-On for Furniture

Virtual try-on for furniture is increasingly becoming a valuable tool for both retailers and customers in the furniture industry. The technology enables real-time visualization, allowing customers to move, rotate, and place virtual furniture within their physical environment. They can view the furniture from different angles and in various positions. Customers can adjust the size and scale of virtual furniture items to ensure they fit perfectly within their space. This helps prevent issues related to furniture that is too large or too small for a room.
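The fit check behind this feature can be sketched as comparing the item’s real-world dimensions with the free space measured in AR. The example below is a simplified, hypothetical version: it assumes both are axis-aligned boxes in meters (for instance, a free area derived from AR plane detection), allows the item to be rotated by 90 degrees on the floor, and optionally reserves clearance around it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    width: float   # meters
    depth: float   # meters
    height: float  # meters


def fits(item: Box, space: Box, clearance_m: float = 0.0) -> bool:
    """True if `item` fits in `space`, optionally rotated 90 degrees on the floor.

    `clearance_m` is the extra room required on every side of the footprint.
    """
    if item.height > space.height:
        return False
    w = item.width + 2 * clearance_m
    d = item.depth + 2 * clearance_m
    as_is = w <= space.width and d <= space.depth
    rotated = d <= space.width and w <= space.depth
    return as_is or rotated
```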

Some virtual try-on platforms allow users to customize furniture items by selecting different colors, materials, and finishes to match their preferences and decor. At MobiDev, we built an AR-powered room measurement solution for a client. It allows users to view the reconstructed room in augmented reality and compare different material options side by side to help them choose.

However, it is important to understand the limitations of this technology. Virtual try-on for furniture often struggles with real-time rendering accuracy, particularly when dealing with varied lighting conditions, room layouts, and furniture textures. Achieving a seamless integration of virtual furniture into a user’s physical space requires advanced AI algorithms to handle object detection, spatial awareness, and occlusion effects.

In addition, the system must be able to adjust the virtual items’ dimensions, materials, and colors to match the room accurately. Developing such applications demands significant resources, including high-quality 3D models, extensive training data, and expertise in computer vision and AR app development. Done well, this makes the virtual try-on experience both realistic and functional, enhancing customer satisfaction and confidence in purchasing decisions.

Developing an AR Virtual Try-On Platform: General Process

Different types of virtual try-on solutions require different technologies and approaches. While some can be built with AR frameworks like ARKit and ARCore, more complex cases run into the limitations of these tools. In our experience, ARKit suffices for the simplest face-tracking architecture. However, advanced projects require custom AI development to meet specific goals, such as face and hair segmentation for trying on different hair colors.

Technologically, a virtual try-on project may require AR technology for basic visualization plus additional AI technology for computations such as pose estimation and segmentation.

The process of building a virtual try-on solution follows the typical development stages:

- Planning: outlining the project scope and goals

- Feature Selection: deciding on the functionalities needed

- UI/UX Design: creating user-friendly interfaces

- Development: building the actual solution

- Testing: ensuring the solution works correctly

- Deployment: launching the solution

- Support & Updates: providing ongoing maintenance and improvements

Ready-Made vs Custom Virtual Try-On Solutions

Although ready-made virtual try-on solutions exist on the market, they don’t always meet the unique needs of a business. Here are a few things to consider:

- Ready-made solutions aren’t always available for your use case, may not offer the highest-quality experience, and may be less flexible than you need.

- A custom-made solution can offer a higher-quality experience and be more cost-effective in the long term.

- Utilizing custom solutions also helps you avoid ongoing fees associated with ready-made options.

Each solution requires careful consideration and technical proficiency to achieve the desired functionality and performance.

Virtual Try-On App Development: Considerations

When developing virtual try-ons, it’s essential to follow industry best practices and to be ready to address certain considerations. These are not necessarily challenges, but aspects that require careful attention to achieve optimal results.

Optimizing Application Performance

To make sure your app performs well, follow a few rules of thumb. For live video, the frame rate should stay stable at 25 frames per second or higher, since a steady frame rate keeps playback smooth and visually appealing. For photo-based try-on, users should wait no more than one to two seconds for the result.
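One way to watch the 25 fps target at runtime is to track a rolling window of frame durations. The class below is a minimal, hypothetical sketch: it reports the average frame rate over the last N frames and flags when that average drops below the target, at which point an app might switch to a lighter model or a lower processing resolution.

```python
from collections import deque


class FrameRateMonitor:
    """Rolling-window frame-rate check against a minimum target (default 25 fps)."""

    def __init__(self, min_fps: float = 25.0, window: int = 30) -> None:
        self.min_fps = min_fps
        self.durations = deque(maxlen=window)  # seconds per frame; oldest dropped first

    @property
    def frame_budget_s(self) -> float:
        # 25 fps leaves 1 / 25 = 0.04 s (40 ms) to process and render each frame.
        return 1.0 / self.min_fps

    def record(self, frame_duration_s: float) -> None:
        self.durations.append(frame_duration_s)

    def average_fps(self) -> float:
        total = sum(self.durations)
        return len(self.durations) / total if total > 0 else 0.0

    def below_target(self) -> bool:
        # Only judge once the window is full, to avoid reacting to start-up noise.
        full = len(self.durations) == self.durations.maxlen
        return full and self.average_fps() < self.min_fps
```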

It is hard to predict how the app will perform across different devices, so the best approach is to test the final app on a wide range of devices, not just high-end phones. Offloading computation to the cloud provides more processing power, but it has drawbacks: the app needs a fast Internet connection, and without one it may not run smoothly, resulting in a poor customer experience.

Data Privacy

Data privacy is a critical concern, especially in today’s digital age. Statista stated that 2024 saw over 1.12 billion data breaches worldwide. Modern app users are increasingly aware of privacy issues, and sharing photos or videos, particularly of tight-fitting clothing, can raise serious concerns. To comply with regulations, it’s crucial to implement a proper consent mechanism for users to approve the use of their data.
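A consent mechanism ultimately needs a hard check in the code path that handles user images. The sketch below shows one possible shape for it, with hypothetical class and purpose names: consent is recorded per user and per purpose with a timestamp, can be revoked, and is checked before any try-on processing runs. Consent UI, persistent storage, and regulation-specific requirements are out of scope here.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Dict, Tuple, TypeVar

T = TypeVar("T")

TRY_ON_PURPOSE = "try_on_image_processing"  # hypothetical purpose identifier


@dataclass(frozen=True)
class ConsentRecord:
    granted: bool
    recorded_at: datetime


class ConsentRequiredError(PermissionError):
    """Raised when user data would be processed without a valid consent."""


class ConsentRegistry:
    def __init__(self) -> None:
        self._records: Dict[Tuple[str, str], ConsentRecord] = {}

    def record(self, user_id: str, purpose: str, granted: bool) -> None:
        # Keep only the latest decision (with a UTC timestamp), so a revocation
        # overrides an earlier grant.
        self._records[(user_id, purpose)] = ConsentRecord(granted, datetime.now(timezone.utc))

    def has_consent(self, user_id: str, purpose: str) -> bool:
        rec = self._records.get((user_id, purpose))
        return rec is not None and rec.granted


def run_try_on(registry: ConsentRegistry, user_id: str, photo: bytes,
               pipeline: Callable[[bytes], T]) -> T:
    """Run the try-on pipeline only if the user has consented to image processing."""
    if not registry.has_consent(user_id, TRY_ON_PURPOSE):
        raise ConsentRequiredError(f"user {user_id} has not consented to {TRY_ON_PURPOSE}")
    return pipeline(photo)
```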

While cloud computing presents new development opportunities, it also introduces potential security risks that demand additional precautions and expertise. A simpler and potentially safer approach is to perform computations directly on the user’s device.

Creating 3D Models

Obtaining high-quality 3D models is crucial to your virtual try-on app’s success, and there are several ways to get them. You can invest in a 3D scanner and software to create high-quality models, or hire a skilled 3D artist to make them. Both options add design costs. Creating a single 3D model may take anywhere from 30 minutes to several hours or even days, depending on its complexity.

Because these models can be quite large, they should be stored in the cloud and downloaded to the user’s device when needed. Consider offering two LODs (levels of detail) for each model, low and high. The low-detail model is smaller, so it can be downloaded and shown to the user quickly, while the high-detail model downloads in the background and replaces it when ready.
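The low/high LOD strategy can be sketched with asyncio: start both downloads, show the low-detail model as soon as it arrives, and swap in the high-detail model when its download finishes (skipping the low one if the high one happens to arrive first). The `fetch` and `show` callables are hypothetical stand-ins for the app’s network and rendering layers, and error handling, such as keeping the low-detail model when the high-detail download fails, is left out.

```python
import asyncio
from typing import Awaitable, Callable


async def load_progressively(model_id: str,
                             fetch: Callable[[str, str], Awaitable[bytes]],
                             show: Callable[[bytes], None]) -> None:
    """Show a low-detail model quickly, then replace it with the high-detail one."""
    # Start the large download first so it keeps running in the background.
    high_task = asyncio.create_task(fetch(model_id, "high"))
    low = await fetch(model_id, "low")
    if not high_task.done():
        show(low)  # display something right away
    show(await high_task)  # upgrade once the detailed model is ready
```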

Remember, developing a try-on solution requires a lot of technical skill to ensure good quality. It’s also important to have realistic expectations for your product and understand the technology’s limitations.

Success Story: Sunglasses Try-On for a Fashion Boutique

Let us share MobiDev’s experience in building virtual try-on solutions with the following case study.

Business Goal

The owner of a USA-based fashion boutique chain (brand under NDA) approached MobiDev to enhance the mobile shopping experience in their existing iOS and Android applications. The goal was to let customers try on sunglasses online, boosting engagement and driving sales. Since most customers used the iOS app, we decided to start with an MVP for iOS and then expand to Android to reach a broader audience.

How We Delivered

To achieve the business goal, we needed to address two key tasks: face tracking and realistic rendering, so that the virtual products closely resemble their real counterparts. Given that the existing iOS app was built in Swift, we decided to use the ARKit framework, specifically ARFaceAnchor. We also planned ahead for the same feature in the Android version of the app, which would use the ARCore framework and its Augmented Faces API.

Both ARKit and ARCore are robust, free frameworks that offer excellent performance. Since the MVP version of the try-on feature initially supported only iOS, we will focus on that platform for now. However, rest assured that the approach is quite similar for both platforms.

Face Tracking

ARFaceAnchor made it easy to get the transform property, which describes the face’s current position (where it is) and orientation (how it is rotated). This property alone gave us the information needed to attach virtual content to the user’s head.

However, as you might have already asked yourself, people have different heads, right? How can we position the sunglasses properly? To solve this, we used ARKit’s face topology (ARFaceGeometry) together with the leftEyeTransform and rightEyeTransform matrices to get additional information about the size and proportions of the user’s face, position the glasses precisely, and choose the proper frame size. Conveniently, ARKit measures distances in meters, which is very useful for these tasks.
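Because ARKit reports leftEyeTransform and rightEyeTransform as 4x4 matrices in the face anchor’s coordinate space, measured in meters, the distance between the eyes can be read directly from their translation components, and it does not change with head pose. The Python sketch below mirrors that arithmetic outside ARKit (in Swift, the translation sits in the matrix’s fourth column, `columns.3`). The size thresholds are hypothetical, and the eye origins only approximate the pupils.

```python
import math
from typing import List, Sequence, Tuple

Matrix4 = Sequence[Sequence[float]]  # 4x4, row-major, translation in the last column

# Hypothetical mapping from eye distance (meters) to frame size, checked in order.
FRAME_SIZES: List[Tuple[float, str]] = [(0.060, "S"), (0.066, "M"), (math.inf, "L")]


def translation(m: Matrix4) -> Tuple[float, float, float]:
    return (m[0][3], m[1][3], m[2][3])


def eye_distance_m(left_eye: Matrix4, right_eye: Matrix4) -> float:
    return math.dist(translation(left_eye), translation(right_eye))


def choose_frame_size(distance_m: float) -> str:
    for upper_bound, size in FRAME_SIZES:
        if distance_m < upper_bound:
            return size
    raise ValueError("distance out of range")


def eye_matrix(x: float, y: float, z: float) -> List[List[float]]:
    """Identity rotation plus translation, standing in for an ARKit eye transform."""
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]
```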

Rendering

Once the sunglasses were positioned and sized properly, we moved on to the second major task: realistic rendering. As a rendering framework, we used SceneKit, which offers a great balance between flexibility and ease of use and integrates closely with ARKit.

To achieve realistic rendering, we needed PBR (Physically Based Rendering), high-quality materials for the 3D objects, and realistic lighting. ARKit provides light estimation. Moreover, with ARFaceTrackingConfiguration we get an ARDirectionalLightEstimate, which indicates not only the intensity of light in the scene but also the direction it comes from. This is made possible by the previously mentioned face topology: a specialized AI algorithm analyzes the shadows on the face and, by understanding its shape (or topology), estimates the location of the light source.
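SceneKit’s PBR pipeline consumes the light estimate for you, but the principle is easy to see in a Lambertian (diffuse) shading sketch. ARDirectionalLightEstimate exposes a primary light direction and a primary light intensity in lumens. The sketch assumes the direction vector points the way the light travels and normalizes intensity so that 1000 lumens counts as neutral, the convention ARKit documents for ambient intensity; both are assumptions to verify against Apple’s documentation, and the function itself is hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Color = Tuple[float, float, float]  # linear RGB in [0, 1]


def _normalize(v: Vec3) -> Vec3:
    n = math.sqrt(sum(c * c for c in v))
    return (v[0] / n, v[1] / n, v[2] / n)


def lambert_shade(normal: Vec3, light_travel_dir: Vec3, intensity_lm: float,
                  albedo: Color, neutral_lm: float = 1000.0) -> Color:
    """Diffuse color of a surface point lit by a single directional light."""
    n = _normalize(normal)
    # Flip the travel direction to get the vector pointing toward the light.
    to_light = _normalize(tuple(-c for c in light_travel_dir))
    n_dot_l = max(0.0, sum(a * b for a, b in zip(n, to_light)))
    k = (intensity_lm / neutral_lm) * n_dot_l
    return tuple(min(1.0, a * k) for a in albedo)
```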

Adding an environment texture gave the glossy frame and the lenses realistic reflections.

Occlusion

And here’s the icing on the cake: occlusion. When a user turns their head, the glasses’ temples should be covered by the face. Since AR overlays objects onto real-world video, we need to “trim” the portions of a 3D object that shouldn’t be visible. This creates the illusion that the face is covering the temple, which is the essence of occlusion.
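Conceptually, occlusion is a per-pixel depth test against an invisible copy of the user’s face. In SceneKit, this is commonly done by rendering an ARSCNFaceGeometry whose material has an empty colorBufferWriteMask, so it writes depth but no color, and drawing it before the glasses. The Python sketch below reproduces only the depth comparison on tiny arrays to show why a temple behind the face disappears; the buffer layout and function name are illustrative.

```python
from typing import List, Optional, TypeVar

P = TypeVar("P")


def composite(camera: List[List[P]], glasses: List[List[P]],
              glasses_depth: List[List[Optional[float]]],
              face_depth: List[List[float]]) -> List[List[P]]:
    """Draw a glasses pixel only where it is closer to the camera than the face.

    glasses_depth is None where the glasses cover no pixel; face_depth is infinity
    where there is no face. Smaller depth means closer to the camera.
    """
    out = []
    for y, cam_row in enumerate(camera):
        row = []
        for x, cam_pixel in enumerate(cam_row):
            g = glasses_depth[y][x]
            visible = g is not None and g < face_depth[y][x]
            row.append(glasses[y][x] if visible else cam_pixel)
        out.append(row)
    return out
```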

Outcomes

The MVP solution we created enabled our client to accelerate time to market and begin collecting user feedback, allowing for iterative improvements for both the iOS and future Android versions. The try-on feature yielded positive results, enhancing user engagement and leading to higher conversion rates, ultimately boosting sales and revenue.

Furthermore, we added a geofencing feature to the application, enabling targeted notifications with special offers for users who had tried on glasses online and were near a physical boutique.
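At its core, the geofencing check compares the user’s location with each boutique’s location and fires when the user is within a given radius. The sketch below uses the haversine great-circle distance with a mean Earth radius of 6,371 km; the 500 m radius, function names, and boutique list are hypothetical. On a real device, the platform’s region monitoring (Core Location’s CLCircularRegion on iOS, the Geofencing API on Android) would normally handle this in a battery-friendly way instead of manual polling.

```python
import math
from typing import List, Optional, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def boutique_to_notify(user_lat: float, user_lon: float, tried_on_online: bool,
                       boutiques: List[Tuple[str, float, float]],
                       radius_m: float = 500.0) -> Optional[str]:
    """Return the first nearby boutique for a user who has tried glasses on, else None."""
    if not tried_on_online:
        return None
    for name, lat, lon in boutiques:
        if haversine_m(user_lat, user_lon, lat, lon) <= radius_m:
            return name
    return None
```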

MobiDev: Virtual Try-On Consulting & Development Services

With rich technological expertise and extensive experience in building software solutions for eCommerce, MobiDev has refined the best AR and AI practices for virtual try-on app development. We adapt to your unique business needs to create exciting solutions for your clients. By leveraging our knowledge in AR and AI, we help you overcome challenges like varying device performance and real-time animation requirements.

Our AR consulting services transform your visionary ideas into feasible solutions you can develop with confidence. By thoroughly analyzing your needs, we’ll recommend a technological pathway that delivers measurable results for your business. Our skilled AR developers and AI engineers are ready to assist in turning these recommendations into a market-ready product, expanding your audience even to low-performance devices. Let’s discuss your needs today!