Contents:

For industries seeking to enhance precision, efficiency, and innovation, LiDAR technology offers transformative solutions. LiDAR is a sophisticated remote sensing technology that MobiDev has extensively utilized in computer vision projects since 2019. This article delves into the LiDAR-based application development process, providing valuable insights and practical knowledge.

What follows primarily focuses on the nuances of standalone LiDAR applications rather than integrated LiDAR on iOS devices. Let’s start by getting an overview of the differences between these two applications of LiDAR.

What is LiDAR and How Does it Work?

Light Detection and Ranging (LiDAR) is a depth-sensing technology that can derive three-dimensional information about the shape and characteristics of objects in a scene. To achieve this, pulsed laser light is emitted from the scanner, which then reflects off the targets in the scene. This reflected light is then captured by the sensor. By calculating the time it takes for this light to reflect and return, also called ”time of flight”, LiDAR can provide precise spatial data. This can greatly enhance the accuracy of measurements and environmental modeling compared to other technologies.

There are two primary domains of LiDAR technologies: integration with mobile devices and standalone applications.

LiDAR as a Depth Sensor on iOS Devices

You are probably quite familiar with LiDAR if you have used an iPhone or iPad Pro for the past few years. LiDAR scanners can be found on the Pro and Pro Max models of iPhone 12 and later, and the 11-inch and 12.9-inch iPad Pro models from 2020 and later. Apple’s hardware leverages advanced depth-sensing technology for superior AR experiences, including measurement applications like room scanning.

The LiDAR scanner powers the capabilities of ARKit, the AR development framework, by calculating the time infrared lasers take to reflect off surfaces and return, creating precise spatial meshes. This enhances AR measuring applications’ accuracy and reliability.

To learn more about our work with integrated LiDAR and other mobile depth sensing technologies, check out our guide on AR measurement applications.

LiDAR As a Standalone Device

LiDAR isn’t just integrated into mobile devices; it can also exist as a standalone technology. It operates independently as an advanced remote sensing technology, using pulsed laser light to measure variable distances to the Earth or other targets, creating precise, three-dimensional information about the shape of the Earth and its surface characteristics. Standalone LiDAR systems can be mounted on various platforms, such as aircraft, drones, or vehicles, and even handheld units. Unlike the integrated LiDAR sensors in smartphones, these standalone systems are often used in various fields, including topographic mapping, forestry, environmental monitoring, and autonomous driving.

Leica LiDAR scanner used to create 3D scans of structures (David Monniaux, CC BY-SA 3.0 via Wikimedia Commons)

Standalone LiDAR systems generally offer greater range and precision compared to smartphone-integrated sensors, capable of covering larger areas and providing more detailed data. Common applications include creating detailed maps of the Earth’s surface in topographic mapping, measuring tree heights, canopy density, and forest structure in forestry, assessing coastal erosion, flood plains, and other environmental changes in environmental monitoring, and enabling autonomous vehicles to navigate by detecting obstacles and understanding the environment. These systems include components such as laser emitters, GPS units, and inertial measurement units (IMUs) to provide accurate georeferencing and orientation.

Top 10 Applications and Use Cases of LiDAR

Let’s explore LiDAR further by taking a look at the top 10 use cases for LiDAR. We’ll explore both standalone LiDAR and mobile applications. Remember, these aren’t the only applications of LiDAR. MobiDev’s strong consulting and engineering expertise with LiDAR systems means we’re prepared for any application that you have in mind to turn it into a game-changing product.

Standalone LiDAR

1. LiDAR for Topographic Mapping

LiDAR technology can create highly detailed maps of the Earth’s surface, essential for accurate land surveying and efficient construction planning. By using time-of-flight measurements, LiDAR generates precise three-dimensional representations of terrain and surface features. This detailed data allows for thorough analysis and planning, ensuring that projects can be designed and executed with greater accuracy and reliability.

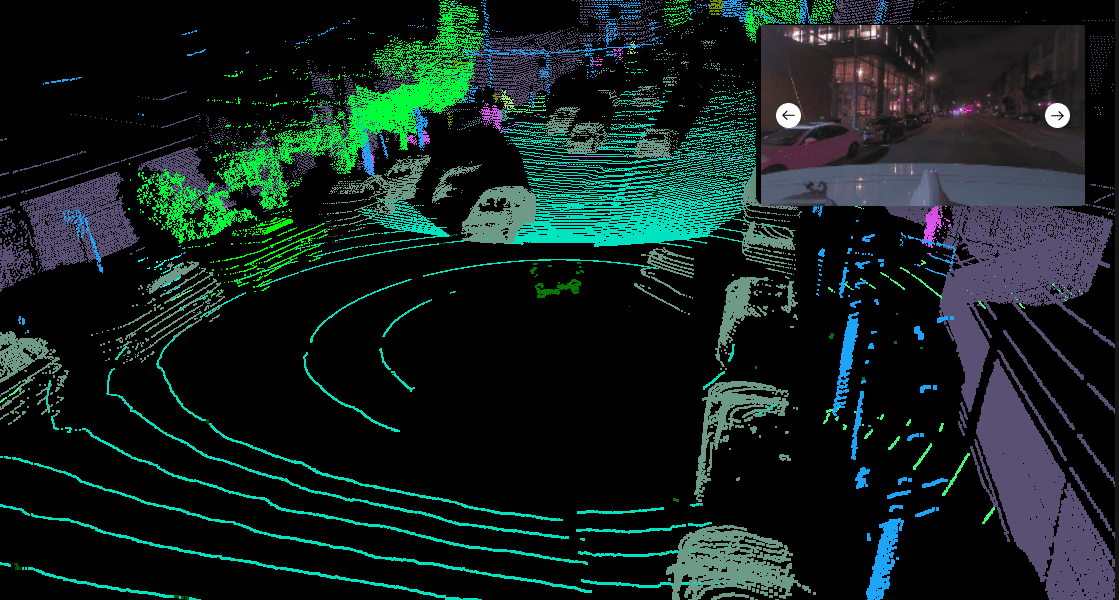

2. LiDAR for Autonomous Vehicles

Self-driving cars need to create high-resolution 3D maps of their environment. LiDAR scanners continuously scan the area, identifying obstacles and feeding this data to the vehicle’s navigation system, enabling informed decision-making and obstacle avoidance. The precise measurements and spatial awareness provided by LiDAR ensure safe and efficient navigation through complex environments.

3. LiDAR for Forestry Management

This technology enables precise measurement of tree heights, canopy density, and forest structure, providing essential data for forest management and conservation. This technology helps in monitoring forest health and detecting changes over time, supporting informed decision-making for sustainable practices. By generating accurate 3D models, LiDAR aids in habitat assessment, biodiversity studies, and the implementation of effective conservation strategies.

4. LiDAR for Environmental Monitoring

Assessing coastal erosion, flood plains, and other environmental changes is made much easier thanks to LiDAR devices. By capturing high-resolution topographic data, LiDAR enables detailed analysis of shoreline changes, helping authorities to design effective coastal protection measures. Additionally, it aids in floodplain mapping, providing critical information for disaster preparedness and land-use planning, ultimately supporting sustainable environmental management and protection efforts.

5. LiDAR for Archaeology

LiDAR’s ability to penetrate dense vegetation and other surface obstructions makes it a valuable tool in archaeology. The hardware’s laser pulses can reach the ground through tree canopies and undergrowth, allowing LiDAR to reveal hidden structures and landscapes that are otherwise invisible to traditional survey methods. This technology has revolutionized archaeological research, enabling the discovery of ancient cities, roads, and other features that were buried beneath thick foliage or modern developments.

Mobile LiDAR

1. LiDAR for Augmented Reality (AR)

Mobile LiDAR provides accurate depth information for AR applications, making digital overlays more realistic and interactive. This allows AR experiences to seamlessly integrate with the physical world, improving user engagement. By mapping the environment in real-time, LiDAR ensures that digital content aligns perfectly with real-world objects.

2. LiDAR for Interior Design and Renovation

Creating precise 3D models of indoor spaces, mobile LiDAR applications like Apple RoomPlan enable virtual walkthroughs and detailed remodeling plans. This accuracy helps designers and homeowners visualize changes before they are made, saving time and resources. By providing exact measurements, LiDAR ensures that renovations fit perfectly within existing structures.

3. LiDAR for Spatial Measurement

With the capability to measure distances, areas, and volumes quickly in real-time, LiDAR aids a variety of professional and personal tasks. This is valuable for construction, landscaping, and event planning, where precise measurements are crucial. The ease of obtaining accurate data with LiDAR streamlines workflows and enhances productivity.

4. LiDAR for Virtual Reality (VR)

Creating a virtual reality experience involves a lot of modeling. However, mobile LiDAR applications like Apple RoomPlan can transform real-life environments into 3D maps that could be used for VR spaces. The realistic spatial data that LiDAR provides ensures that virtual elements align with the user’s environment, enhancing the sense of presence. As a result, VR applications become more engaging and effective for training, gaming, and simulations.

5. LiDAR for Gaming

Incorporating real-world spatial data into game environments, LiDAR enables more interactive and engaging experiences. This technology allows players to navigate and interact with game worlds that mirror their physical surroundings, adding a new layer of realism. By integrating LiDAR data, developers can create dynamic and responsive gameplay that adapts to the player’s environment.

How to Develop a LiDAR-based Software Product

Taking advantage of LiDAR technology can be quite technical. However, understanding how it works can be beneficial for understanding the feasibility of LiDAR for accomplishing your business objectives. This section will dive into the more technical nuances of how LiDAR works and how to utilize it to its fullest potential.

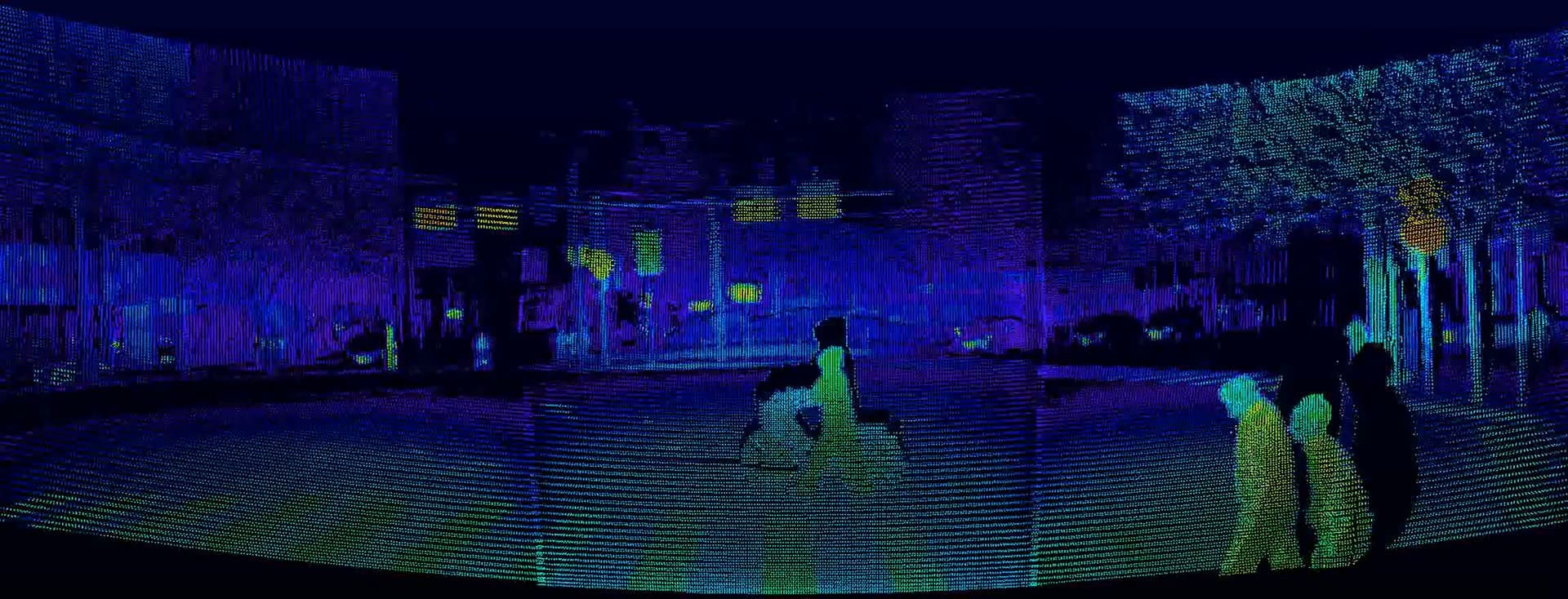

1. Working with a LiDAR Point Cloud

LiDAR sensors are similar to digital cameras in that their outputs are useless without additional processing. The ”RAW” camera data from a digital camera isn’t useful until it’s processed, and the same goes for LiDAR sensor data — the LiDAR point cloud. This is the 3D representation of what the sensor can see. This data may include:

- Coordinates (XYZ)

- Velocity

- Intensity

- Ambient light

2. Pre-Processing

The highest LiDAR point cloud resolutions may result in more than 65,000 points. As a result, point clouds need to be pre-processed to extract the most useful information. Bulk processing is rarely required, since point clouds fetch a lot of surrounding information that just slows down the rest of the system. Here are some of the most common pre-processing techniques we use on our LiDAR projects:

- Downsampling: reducing point cloud density to make processing more efficient. Best when original data is too dense. You can also use voxel grid downsampling or random sampling.

- Outlier removal: remove more extreme points that are caused by sensor noise or measurement errors.

- Ground removal: the ground typically dominates point clouds. Focusing on non-ground points can make systems more efficient.

- Normalization: align the point cloud data to a common reference frame or coordinate system. This process ensures consistency and facilitates the comparison of LiDAR data collected at different times or from different devices.

- Smoothing, cropping, & filtering: reduce noise and enhance overall quality.

- Transformations: by shifting, rotating along the axis, scaling, and mirroring the point cloud, you can effectively center or axis-align the data, as well as synchronize point clouds from various sources.

When the majority of LiDAR applications involve processing LiDAR output in real-time, pre-processing is essential to conserve resources and maximize performance. Which pre-processing techniques you choose will depend on your specific use-case.

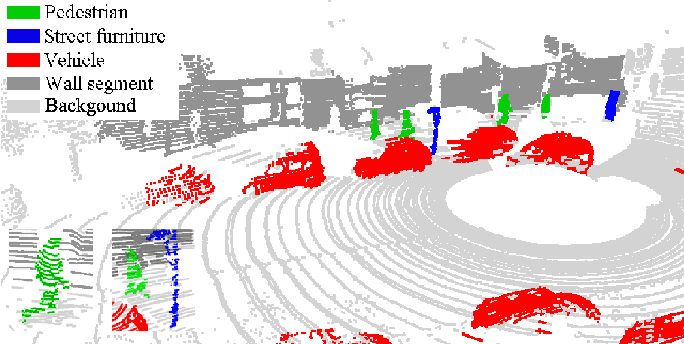

3. Segmentation & Object Detection

The potential of point clouds can be extended much further when combined with algorithms for segmentation and object detection tasks. Two approaches to achieve this are:

- Machine learning algorithms: radial search algorithms detect large clusters of points. They’re simple, quick and don’t require training, but require significant pre-processing.

- Neural networks: either pre-trained datasets (like voxelNet, PointCNN, or SparsConvNet) or custom models can have more accurate results and require less pre-processing. Neural networks also allow systems to perform more than one task at a time.

4. Object Classification

LiDAR point cloud data can be used to identify and classify objects based on their shapes. In some cases, this can make point clouds even more effective than traditional machine vision applications because LiDAR can’t see variances like color and other minor details that can confuse optical models. More importantly, utilizing publicly generated point cloud data can be more ethical since faces, license plates and other identifying information can’t be detected by the sensor.

Neutral networks can accomplish object classification using LiDAR point cloud data, either in 3D or 2D. Aside from the coordinates of the points, these networks can be informed by other data like intensity, ambient light, or reflective data. 2D models can be easily applied to most situations with a little fine tuning.

5. Movement Prediction and Tracking

Navigation and tracking in outdoor environments are some of the most common scenarios for commercial LiDAR devices. When using LiDAR systems in real-time, objects can be detected or segmented on each frame. However, we need to keep track of these objects over time. By providing a tracking ID to each object, we can have continuity between frames and even predict their trajectories.

Models like JPDAF, SORT, and DeepSORT can track multiple 3D objects out of the box, making them ideal for use in autonomous vehicles. Deep learning approaches like RNN and Graph Networks are a few alternatives.

6. LiDAR Integration

If you’re using a standalone LiDAR sensor, the device that you choose will determine the format and types of measurements that your software will have to work with. To interact with the device itself and extract the data output, we’ll need an API to accomplish the following:

- Connect to, set up, and configure the sensors

- Stream data from sensors to other software applications

- Record data and enable recordings to be read by other applications

- Visualize data

Other smaller configurations will vary depending on the sensor you use. The more sensors are in your setup, the longer the configuration process will take. Remember, all of the devices must be synchronized, and data must be converted into a common format.

Because of the time it takes for data to move around, processing must take place close to your device or devices. One solution is to utilize edge devices, small computers optimized for machine learning tasks. Edge devices can provide a wide range of benefits like real-time processing of data input, as well as bandwidth and energy efficiency. When configured correctly, they can also help you maintain regulatory compliance. Edge devices are designed to interact with other devices like multiple LiDAR sensors.

In our projects, we’ve used edge devices like Nvidia Xaviers to give the system mobility and implement offline modes of work. Since edge devices have fewer computing resources, we chose C++ to overcome platform limitations and integration issues. Since C++ is a low-level language, it can provide a faster computational speed compared to other languages.

Read also:

Edge Biometrics in SecurityOvercoming LiDAR Application Development Challenges

1. Occlusion

Although this data is extremely useful for spatial applications, it does come with its fair share of challenges. For one, LiDAR sensors aren’t magic — they can’t see through obstructions. If you need data for a more complete scene that has less occlusion, you may need multiple LiDAR sensors.

Additionally, you should consider that LiDAR isn’t a perfect replacement for other computer vision technologies. LiDAR sensors can’t see color, text, or very small details on objects. Combining these sensors with computer vision technologies can help better capture environments because of this.

2. Black Objects

Another well-known limitation of LiDAR is that it’s challenging to scan objects with black paint. These objects absorb more light, meaning that less of the laser’s energy is reflected back at the sensor. This corrupts the point cloud and can be dangerous for autonomous vehicles. This risk can be mitigated in much the same way as occlusion problems can — combining LiDAR with other kinds of sensors. To accomplish this, we developed a separate model that fills in missing areas with values to help detect black objects.

3. Framerate

Framerate can also be a limiting factor. Machine vision models set up for optical sensors can handle 30 frames per second (FPS), while LiDAR can only operate at 20 FPS. Dynamic environments need greater response times. Some approaches are:

- Multiple sensors can gather data in shifts. This requires careful data synchronization, but the output can be merged to generate a higher framerate.

- ML algorithms can perform frame interpolation, predicting what occurs between frames.

We’ve incorporated both approaches in our projects, and there are advantages to both. However, we’ve found that a multi-sensor setup is more reliable.

4. Real-Time Processing

As you’ve read previously, there are a lot of obstacles in our way when it comes to real-time processing, which accounts for the majority of LiDAR applications. Anything that can slow down the process will be detrimental to the final product. To combat this, we’ve developed a few best practices in our projects:

- Using low-level languages like C++ for codebases to provide the fastest performance

- System design optimization

- Choosing algorithms based on benchmarks and testing

- Selecting appropriate model architectures

We use similar optimization techniques for other applications like machine learning and computer vision, which use real-time video processing.

Enhancing LiDAR Technology with AI

Combining AI algorithms with LiDAR technology significantly enhances data analysis by enabling accurate 3D mapping and efficient data processing. This integration involves the following steps:

- Data preprocessing: Cleaning and organizing raw data to ensure quality inputs.

- Feature extraction: Identifying significant patterns and characteristics within the data.

- Object recognition: Recognizing and classifying objects based on extracted features.

- Data fusion with other sensors: Combining information from LiDAR with data from other sensors for a holistic view.

Some benefits of integrating AI with LiDAR technologies include:

- Enhanced object recognition ensures precise identification, vital for autonomous vehicles

- Improved anomaly detection aids in predictive maintenance and reduces downtime

- Optimization and real-time monitoring boost operational efficiency

- Predictive analytics offer strategic foresight

Some applications of this approach include autonomous vehicles, construction, agriculture, the energy sector, smart city planning, environmental monitoring, and industrial automation. Future trends point to sensor miniaturization, increased automation, faster data processing, and better object recognition accuracy.

While LiDAR data might seem less common for automating visual tasks, it doesn’t necessarily complicate or inflate project costs. Rather, LiDAR presents an alternative method for a computer to interpret its environment. In certain scenarios, LiDAR is even preferred over video or images due to the unique data properties that can beextracted and processed.

Let’s Build a LiDAR-based Product Together

At MobiDev, we continuously expand our LiDAR expertise to address challenges and meet optimization needs across various devices. Leveraging our extensive experience with both standalone LiDAR devices and other depth sensors, we offer comprehensive support from concept to launch, providing top-tier tech strategy consulting services and seasoned development teams to bring your vision to life.