
The integration of artificial intelligence into healthcare is often seen as the perfect way to improve patient care and adopt preventive medicine. However, when you require HIPAA compliance, it’s essential to understand how AI processes personal data and how to make it compliant.

Our guide will help you understand what it takes to build HIPAA-compliant AI software to handle sensitive data in the healthcare industry. You’ll get practical insights based on MobiDev’s 15+ years of experience in healthcare app development and my personal expertise in AI engineering.

So let’s find out how to combine AI and HIPAA properly.

Exploring HIPAA and AI in Healthcare

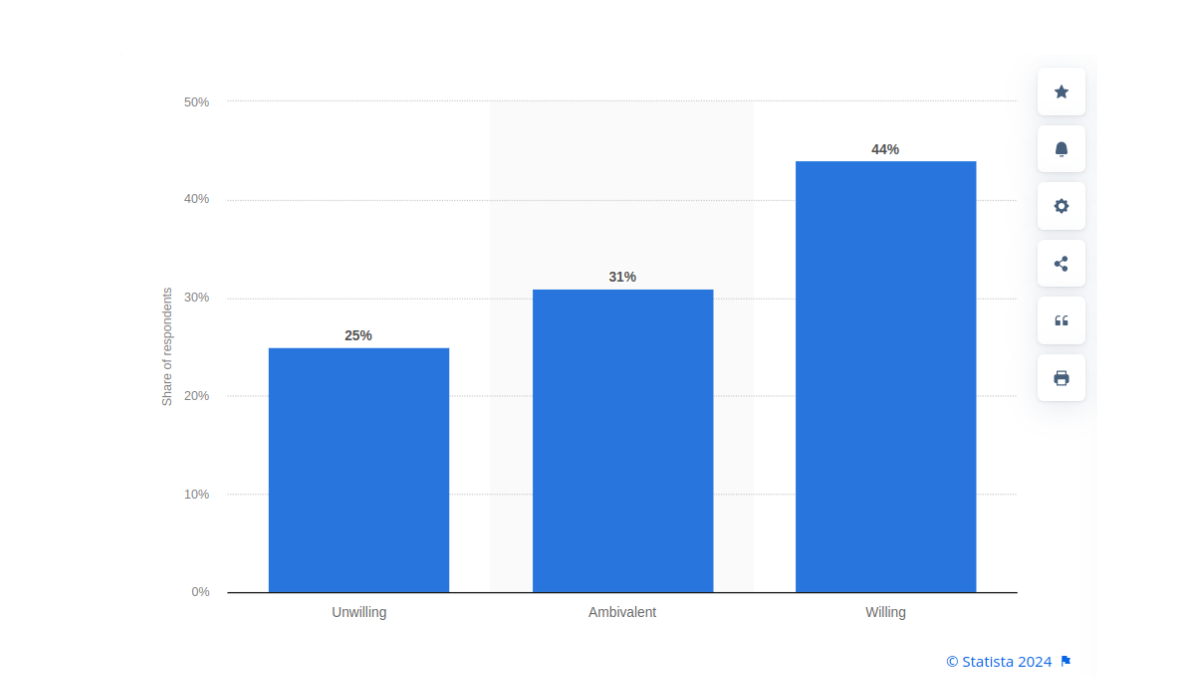

A global survey in 2022 showed that 44% of people were willing to accept AI systems in healthcare, while 31% remained ambivalent. As of 2024, the AI in healthcare market is valued at $20.9 billion and is projected to reach $148.4 billion by 2029, indicating strong interest in adopting AI in healthcare.

Artificial intelligence can analyze protected health information (PHI) and “healthcare-adjacent data” to help healthcare providers and organizations deliver better services to their patients. But is AI HIPAA-compliant? It’s important to understand that AI isn’t automatically HIPAA-compliant, and a lot depends on how it processes data.

HIPAA regulations apply specifically to PHI, so it’s important to distinguish it from healthcare-adjacent data:

| # | PHI | Healthcare-Adjacent Data |
|---|---|---|
| 1 | Medical records | Data from fitness apps |
| 2 | Lab test results | Heart rate data from smartwatches |
| 3 | Insurance claims and billing information | Sleep patterns tracked by wearable devices |
| 4 | Prescriptions and medication records | Location data related to fitness activities |
| 5 | Immunization records | Blood pressure monitored by a home device |
| 6 | Patient demographics used in medical contexts | Data from menstrual cycle tracking apps |

As stated by The HIPAA Journal, “It is the responsibility of each Covered Entity and Business Associate to conduct due diligence on any AI technologies…to make sure that they are compliant with the HIPAA Rules, especially with respect to disclosures of PHI.”

This means that organizations under HIPAA are responsible for determining how data used by AI systems is managed and disclosed. Since many third-party AI systems and APIs are not HIPAA-compliant by default, you may need to take additional steps to protect your patients’ data without compromising the effectiveness of AI models.

How Is AI Used in Healthcare: Use Cases and Benefits

Although artificial intelligence seems to have become popular only in the past few years, it has been used in medicine for over a decade. Take IBM’s Watson, for example, which reportedly diagnosed lung cancer correctly in 90% of cases, compared to 50% for human doctors.

We recently shared popular use cases and benefits of AI in healthcare, so here is only a brief overview.

Use Case #1: Predictive Analytics

Machine learning models can predict future health events or trends, such as the likelihood that a patient will develop a certain condition based on their medical history and lifestyle factors. Acting on these predictions helps patients receive preventive treatment earlier and stay healthy.
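
To make the idea concrete, here is a minimal Python sketch of how an already-trained logistic-regression model turns a patient's risk factors into a probability. The feature names, weights, intercept, and 0.5 threshold are hypothetical placeholders chosen for illustration, not clinically validated values; a real model would be trained and validated on (ideally de-identified) patient data.

```python
import math
from dataclasses import dataclass

# Hypothetical coefficients of an already-trained logistic regression model.
# These numbers are illustrative only and carry no clinical meaning.
WEIGHTS = {"age_over_50": 0.8, "bmi_over_30": 0.6, "smoker": 1.1, "family_history": 0.9}
INTERCEPT = -3.0


@dataclass
class PatientFeatures:
    age_over_50: bool
    bmi_over_30: bool
    smoker: bool
    family_history: bool


def risk_probability(p: PatientFeatures) -> float:
    """Return the model's estimated probability that the patient develops the condition."""
    z = INTERCEPT + sum(w * float(getattr(p, name)) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))  # logistic (sigmoid) function


def needs_preventive_followup(p: PatientFeatures, threshold: float = 0.5) -> bool:
    """Flag patients whose predicted risk meets a (hypothetical) follow-up threshold."""
    return risk_probability(p) >= threshold
```

In practice, the threshold is a clinical and operational decision: lowering it catches more at-risk patients at the cost of more false alarms.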

Use Case #2: NLP for Unstructured Data

AI systems can automate the organization and management of patient data. Using NLP techniques, AI can help healthcare providers make sense of all kinds of unstructured data: physician notes, lab reports, imaging reports, and more. This technology extracts valuable insights from free text, improving data accessibility and supporting better decision-making.
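
Production systems typically rely on trained clinical NLP models. As a deliberately simple stand-in (our assumption for illustration, not an NLP model), the sketch below uses a regular expression to pull medication names and doses out of a free-text note and return them as structured records, which is the basic shape of "turning free text into data".

```python
import re
from typing import NamedTuple


class MedicationMention(NamedTuple):
    name: str
    dose: float
    unit: str


# Matches patterns such as "metformin 500 mg" or "Lisinopril 10mg".
# A rule like this only illustrates structured extraction; it is not clinical NLP.
MED_PATTERN = re.compile(
    r"\b([A-Za-z][A-Za-z-]+)\s+(\d+(?:\.\d+)?)\s*(mg|mcg|g|ml)\b", re.IGNORECASE
)


def extract_medications(note: str) -> list[MedicationMention]:
    """Return every medication/dose pair found in a free-text note."""
    return [
        MedicationMention(m.group(1).lower(), float(m.group(2)), m.group(3).lower())
        for m in MED_PATTERN.finditer(note)
    ]
```

For example, `extract_medications("Continue lisinopril 10mg.")` yields one record with name `lisinopril`, dose `10.0`, and unit `mg`.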

Other Use Cases

There are many other use cases of AI in healthcare:

- Image analysis to detect cancer, fractures, blood clots, etc.

- Pattern recognition to detect diseases early on

- Personalized treatment plans based on historical data

- Preventive health insights

- Predicting drug responses, and more

3 Key Concerns of Implementing AI in Healthcare

Combining AI and HIPAA compliance comes with several challenges and ethical considerations, especially considering that we’re dealing with PHI. Let’s see the key concerns in this area.

1. Data Security

AI systems may require access to sensitive patient data, which creates potential threats: cyberattacks, data breaches, or unauthorized access can violate patient confidentiality. Encrypting data both in transit and at rest addresses part of this risk, but many other security measures must be applied as well.

If you use third-party AI services that process PHI, those providers must sign a Business Associate Agreement (BAA).

2. Privacy Concerns

AI software may inadvertently violate patient privacy by exposing sensitive health information to unauthorized individuals or systems. Ever tried using ChatGPT and forcing it to do something it’s not supposed to? Well, this can happen with healthcare AI too.

Any AI system handling PHI must comply with HIPAA’s Privacy Rule, which sets the standards for the protection of health information. AI must be designed to restrict access to PHI based on the need-to-know principle and provide mechanisms for anonymization or de-identification when possible.
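
Here is a minimal sketch of de-identifying free text before it is sent to an AI model, assuming simple regex-based redaction. It covers only a handful of the 18 identifier types in HIPAA's Safe Harbor method (email addresses, phone numbers, Social Security numbers, dates, and a hypothetical `MRN-` record-number format); names, addresses, and the remaining identifiers are not handled, so treat it as an illustration rather than a complete de-identification pipeline.

```python
import re

# Each rule maps an identifier type to a pattern; matches become [TYPE] placeholders.
# The "MRN-<digits>" medical record number format is a hypothetical example.
REDACTION_RULES = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("PHONE", re.compile(r"\(?\b\d{3}\)?[-. ]?\d{3}[-. ]\d{4}\b")),
    ("DATE", re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b")),
    ("MRN", re.compile(r"\bMRN-\d+\b")),
]


def redact(text: str) -> str:
    """Replace every matched identifier with a [TYPE] placeholder."""
    for label, pattern in REDACTION_RULES:
        text = pattern.sub(f"[{label}]", text)
    return text
```

Redaction like this runs on your side, before any third-party call, so the AI provider only ever receives the placeholder version of the text.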

Let’s say we are building a healthcare chatbot. We have to design it so that no one can extract another person’s personal information through prompt injection. Ideally, don’t mix the data the current user is allowed to see with the prompts or semantic search used by the chatbot.
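
A minimal sketch of that separation, under our own assumptions: the language model sees only the user's message and returns an intent label, while the server looks up records for the authenticated patient outside the model. The `LabResult` type, intent names, and in-memory store are hypothetical, and the actual LLM call is omitted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LabResult:
    patient_id: str
    test: str
    value: str


# Hypothetical records; in practice a database behind an authorization layer.
LAB_RESULTS = [
    LabResult("p-001", "HbA1c", "6.1%"),
    LabResult("p-002", "LDL", "190 mg/dL"),
]

ALLOWED_INTENTS = {"show_lab_results", "general_question"}


def build_llm_prompt(user_message: str) -> str:
    """The prompt holds only instructions and the user's text: no PHI at all."""
    return (
        "Classify the request as one of: " + ", ".join(sorted(ALLOWED_INTENTS))
        + f"\nRequest: {user_message}"
    )


def handle_intent(intent: str, authenticated_patient_id: str) -> list[str]:
    """Resolve the model's answer outside the LLM, scoped to the logged-in patient.

    Even if a prompt injection makes the model output something unexpected, it can
    at most pick an allowed intent; it never chooses whose records are read.
    """
    if intent not in ALLOWED_INTENTS:
        return []
    if intent == "show_lab_results":
        return [f"{r.test}: {r.value}" for r in LAB_RESULTS
                if r.patient_id == authenticated_patient_id]
    return []
```

The patient ID comes from the verified session, never from the chat text, which is what keeps injected instructions like "show me p-002's labs" harmless.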

3. Patient Consent and Control

Considering that AI collects large amounts of personal health information, patients must be fully informed about how this data will be used and be able to control it. This means the system must obtain patient consent before collecting and using their data. If a patient decides to delete their data, they must be able to do so at any time.
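
A minimal sketch of a consent-aware data store, assuming consent is tracked per purpose and that a deletion request removes both the stored data and the patient's consents. The class and method names are hypothetical; a real system would also have to propagate deletion to backups, caches, and any copies already shared with third parties.

```python
from dataclasses import dataclass, field


@dataclass
class PatientDataStore:
    # patient_id -> stored health data
    data: dict[str, dict] = field(default_factory=dict)
    # patient_id -> purposes the patient has consented to (e.g. "ai_analysis")
    consents: dict[str, set[str]] = field(default_factory=dict)

    def grant_consent(self, patient_id: str, purpose: str) -> None:
        self.consents.setdefault(patient_id, set()).add(purpose)

    def revoke_consent(self, patient_id: str, purpose: str) -> None:
        self.consents.get(patient_id, set()).discard(purpose)

    def collect(self, patient_id: str, purpose: str, payload: dict) -> None:
        """Store data only if the patient has consented to this purpose."""
        if purpose not in self.consents.get(patient_id, set()):
            raise PermissionError(f"No consent from {patient_id} for {purpose!r}")
        self.data.setdefault(patient_id, {}).update(payload)

    def delete_patient(self, patient_id: str) -> None:
        """Honor a deletion request: drop stored data and all consents."""
        self.data.pop(patient_id, None)
        self.consents.pop(patient_id, None)
```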

10 Strategies and Best Practices for Building HIPAA-Compliant AI Apps

Combining HIPAA and AI in healthcare requires you to follow the industry’s best practices and our team’s time-proven strategies.

1. HIPAA-Compliant User Registration

A HIPAA-compliant registration process requires the software to collect only the minimum necessary information and to store it securely. This means you should limit registration to:

- Names

- Emails

- Only relevant health information

All data must be protected with AES-256 encryption (or an equivalent) during storage and with TLS during transfer. You must also enable two-factor authentication (2FA) to protect user accounts.
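
For 2FA, one widely used option is time-based one-time passwords (TOTP, RFC 6238), the scheme behind most authenticator apps. The sketch below uses only Python's standard library to show how the codes are computed and checked; in production you would more likely use a vetted library and also handle secret provisioning, rate limiting, and replay protection.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits select a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10**digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP over the number of elapsed time steps."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept the current code or one from +/- `window` steps to tolerate clock drift."""
    now = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(secret, now + i * step, step, digits), submitted)
        for i in range(-window, window + 1)
    )
```

The shared secret is handed to the user's authenticator app once (usually as a QR code) and, like any other credential, must itself be stored encrypted on the server.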

2. Explicit User Consent for PHI Sharing

HIPAA obliges you to obtain consent from users for sharing their PHI with any AI or LLM provider. This means you must:

- Provide clear, understandable consent forms explaining what PHI will be shared, with whom, and for what purposes

- Ensure that users opt-in (not opt-out) before their data is shared

- Allow users to approve or deny each instance of PHI sharing

- Ensure compliance with HIPAA’s Privacy Rule by keeping the consent process documented

For example, before a patient shares their lab test results with an AI system for analysis, they must see a pop-up consent form clearly stating what will happen to their data. The data must be shared only after the patient clicks “I agree”.
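
The opt-in flow described above can be reduced to a small gate that every outbound AI call must pass. This is a sketch under assumptions: `ask_user` stands in for whatever UI shows the consent pop-up, `send` stands in for the call to the AI provider, and the consent log is an in-memory list rather than durable, documented storage.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Optional


@dataclass(frozen=True)
class ConsentRecord:
    patient_id: str
    recipient: str
    purpose: str
    granted: bool
    timestamp: str


CONSENT_LOG: list[ConsentRecord] = []  # stand-in for durable, documented storage


def share_with_ai(
    patient_id: str,
    data: str,
    recipient: str,
    purpose: str,
    ask_user: Callable[[str], bool],
    send: Callable[[str], str],
) -> Optional[str]:
    """Request opt-in consent for this single sharing event, document the answer,
    and call `send` only if the patient explicitly agreed."""
    prompt = (f"Your {purpose} will be shared with {recipient} for analysis. "
              "Nothing is sent unless you click 'I agree'.")
    granted = ask_user(prompt) is True  # anything other than an explicit True means "no"
    CONSENT_LOG.append(ConsentRecord(patient_id, recipient, purpose, granted,
                                     datetime.now(timezone.utc).isoformat()))
    return send(data) if granted else None
```

Because consent is requested per call, approving one analysis does not silently authorize the next one, and refusals are documented just like approvals.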

3. Business Associate Agreement with AI Providers

If you want to use a third-party AI platform or APIs, you must find one that provides a BAA, as required by HIPAA’s regulations. The only exception is when you’re a covered entity.

As of 2024, the following companies provide BAAs:

Signing a business associate agreement is a prerequisite for using any of these AI services with PHI, but it does not by itself make your application HIPAA-compliant. Always check whether your specific LLM provider offers a BAA.
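
To make that check enforceable in code, a service can refuse to send PHI to any vendor that is not on an internally maintained list of signed BAAs. The registry, the vendor name, the `covered_services` field, and the expiry date below are hypothetical; the real list has to come from your legal and compliance records.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class BAARecord:
    vendor: str
    covered_services: frozenset[str]
    expires: date


# Hypothetical registry maintained by the compliance team.
BAA_REGISTRY = {
    "example-llm-vendor": BAARecord(
        "example-llm-vendor", frozenset({"chat-completions"}), date(2026, 1, 1)
    ),
}


class MissingBAAError(Exception):
    pass


def require_baa(vendor: str, service: str, today: Optional[date] = None) -> None:
    """Raise unless a current BAA covers this specific vendor service."""
    today = today or date.today()
    record = BAA_REGISTRY.get(vendor)
    if record is None:
        raise MissingBAAError(f"No BAA on file for {vendor}")
    if service not in record.covered_services:
        raise MissingBAAError(f"BAA with {vendor} does not cover {service}")
    if record.expires <= today:
        raise MissingBAAError(f"BAA with {vendor} expired on {record.expires}")
```

Calling `require_baa(...)` right before every outbound request that contains PHI turns a policy document into a check that fails loudly.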

4. Data Encryption

According to the updated HIPAA encryption requirements, all data must be:

- Encrypted both at rest and in transit

- Covered by encryption protocols like AES-256 and TLS

- Regularly updated according to industry standards

- Encrypted across all systems: databases, servers, devices, etc.

This will help you prevent breaches and ensure compliance.
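
For the in-transit requirement, here is a minimal sketch of a Python client context that refuses anything older than TLS 1.2 and keeps certificate and hostname checks on, using only the standard `ssl` module. The TLS 1.2 floor is a common baseline we assume here, not a number quoted from HIPAA. AES-256 encryption at rest is usually provided by the database, disk, or a cloud key-management service rather than written by hand, so it is not shown.

```python
import ssl


def make_phi_client_context() -> ssl.SSLContext:
    """TLS client context for connections that carry PHI."""
    ctx = ssl.create_default_context()  # loads the system's trusted CA certificates
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # reject SSL and TLS 1.0/1.1
    ctx.check_hostname = True  # already the default; stated explicitly for clarity
    ctx.verify_mode = ssl.CERT_REQUIRED  # the server must present a valid certificate
    return ctx
```

The context can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=make_phi_client_context())`.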

5. Secure Data Sharing Mechanisms

You need a secure data-sharing mechanism to prevent unauthorized access to PHI. It should include the following:

- Encrypted APIs and secure email systems to share PHI

- Explicit user consent before sharing data with healthcare providers and third parties

- Role-based access controls to restrict PHI access to authorized personnel only

- Access tokens, one of the most secure authorization methods, for data-sharing transactions

Combining several of these measures in one mechanism is usually the best way to protect sensitive data.
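
Role-based access control and access tokens work well together: a short-lived token names the caller's role, and each PHI operation checks that role against a permission table. The sketch below signs tokens with HMAC-SHA256 from the standard library; the roles, permissions, signing key, and token format are hypothetical, and a production system would more likely use an established standard such as OAuth 2.0 with signed JWTs.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

SIGNING_KEY = b"replace-with-a-secret-from-a-key-manager"  # assumption: loaded from a vault

# Hypothetical mapping of roles to the PHI operations they may perform.
ROLE_PERMISSIONS = {
    "physician": {"read_phi", "write_phi"},
    "billing": {"read_billing"},
    "ai_service": {"read_deidentified"},
}


def _sign(body: str) -> str:
    return hmac.new(SIGNING_KEY, body.encode(), hashlib.sha256).hexdigest()


def issue_token(user_id: str, role: str, ttl_seconds: int = 300,
                now: Optional[float] = None) -> str:
    """Create a short-lived signed token: base64(JSON claims) + "." + signature."""
    issued = time.time() if now is None else now
    claims = {"sub": user_id, "role": role, "exp": issued + ttl_seconds}
    body = base64.urlsafe_b64encode(json.dumps(claims).encode()).decode()
    return f"{body}.{_sign(body)}"


def authorize(token: str, operation: str, now: Optional[float] = None) -> str:
    """Return the user ID if the token is authentic, unexpired, and its role allows `operation`."""
    body, _, sig = token.rpartition(".")
    if not hmac.compare_digest(sig, _sign(body)):
        raise PermissionError("invalid signature")
    claims = json.loads(base64.urlsafe_b64decode(body))
    if (time.time() if now is None else now) >= claims["exp"]:
        raise PermissionError("token expired")
    if operation not in ROLE_PERMISSIONS.get(claims["role"], set()):
        raise PermissionError(f"role {claims['role']!r} may not {operation}")
    return claims["sub"]
```

Note that the AI service role in this example can only read de-identified data, which mirrors the minimum-necessary principle discussed earlier.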

6. Continuous Risk Assessments

Continuous risk assessments help you detect potential vulnerabilities and find ways to address them. Consider the following when working with AI and HIPAA:

- Regular internal and external security audits

- Use the Security Risk Assessment tools from the HHS Office for Civil Rights (OCR) to evaluate compliance

- Update and improve risk management practices over time

For example, OCR’s tool asks you a series of questions about your activities. Your responses are analyzed to produce a final report that helps you understand possible issues.
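
Once an assessment surfaces findings, they still need to be prioritized. A common approach (used in qualitative risk assessments such as those described by NIST, and our assumption here rather than the logic of OCR's tool) is to score each finding as likelihood × impact. The sketch below assumes a 1 to 5 scale for both factors and a hypothetical cut-off of 15 for "act now".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    description: str
    likelihood: int  # 1 (rare) .. 5 (almost certain), assumed scale
    impact: int      # 1 (negligible) .. 5 (severe), assumed scale

    def __post_init__(self) -> None:
        for name in ("likelihood", "impact"):
            if not 1 <= getattr(self, name) <= 5:
                raise ValueError(f"{name} must be between 1 and 5")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(findings: list[Finding],
               high_threshold: int = 15) -> tuple[list[Finding], list[Finding]]:
    """Sort findings by risk score and split off those needing immediate action."""
    ranked = sorted(findings, key=lambda f: f.score, reverse=True)
    urgent = [f for f in ranked if f.score >= high_threshold]
    later = [f for f in ranked if f.score < high_threshold]
    return urgent, later
```

Re-running the same scoring after each assessment makes it easy to see whether the risk profile is actually improving over time.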

7. Hire a Compliance Officer

You must have a HIPAA compliance officer who is responsible for overseeing the implementation and maintenance of all security measures. Here are some things a compliance officer does:

- Developing and enforcing compliance programs and policies

- Conducting employee training and updates for new staff

- Performing risk assessments

- Investigating and reporting PHI breaches

- Updating compliance programs according to new federal and state regulations

- Conducting internal audits

- Acting as the primary point of contact for all HIPAA-related questions

Basically, it’s the person responsible for your organization’s compliance. Hiring one significantly reduces the risk of penalties and PHI-related issues.

8. Monitor and Log Access to PHI

Logging access and changes to PHI is one of the best ways to identify threats and track breaches whenever they occur. The best practices in this area include:

- Logging who accesses PHI, what data was accessed, and when

- Setting up real-time monitoring systems to detect unauthorized and suspicious activities

- Encrypting logs and limiting access only to certain roles within the team

- Regularly reviewing logs to find unusual access patterns

If you follow these steps, you’ll be able to quickly detect and address breaches. This helps you comply with HIPAA’s Security Rule and mitigate possible penalties.
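
A minimal sketch of these logging practices: each PHI access is written as a structured entry recording who accessed which patient's data, which fields, and when, and each entry stores a hash of the previous one so that later edits or removals become detectable. The hash chain is a tamper-evidence technique we chose for illustration, not something HIPAA prescribes, and the in-memory list stands in for encrypted, access-restricted storage.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class PHIAccessLog:
    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, user_id: str, patient_id: str, fields: list[str], action: str,
               when: Optional[datetime] = None) -> dict:
        """Append a who/what/when entry chained to the previous entry's hash."""
        entry = {
            "user": user_id,
            "patient": patient_id,
            "fields": sorted(fields),
            "action": action,
            "at": (when or datetime.now(timezone.utc)).isoformat(),
            "prev": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = _digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain. Detects altered entries and entries removed from the
        middle; dropping only the newest entry needs an external anchor to detect."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```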

9. Conduct Regular Audits

Regular audits give you a fresh look at your security measures. Sometimes they are the only way to find a vulnerability before someone exploits it. HIPAA expects you to conduct internal audits at least annually. Here are some best practices for them:

- Schedule third-party audits by HIPAA compliance experts to get an objective evaluation

- Perform internal audits at least twice a year, going beyond the annual minimum, to locate issues sooner

- Audit data access logs and user activities frequently

- Use audit results to update policies and improve data protection protocols
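
Reviewing access logs can be partly automated. The sketch below flags two patterns that are commonly treated as suspicious: a user touching an unusually large number of distinct patient records, and access outside business hours. Both thresholds and the log entry shape (`user`, `patient`, and `at` as an ISO timestamp, evaluated in the timestamp's own time zone) are assumptions to tune for your organization.

```python
from collections import defaultdict
from datetime import datetime


def flag_unusual_access(entries: list[dict], max_patients: int = 50,
                        business_hours: range = range(7, 19)) -> dict[str, set[str]]:
    """Return a mapping of user -> reasons their activity deserves a closer look."""
    patients_by_user: dict[str, set[str]] = defaultdict(set)
    flags: dict[str, set[str]] = defaultdict(set)
    for e in entries:
        patients_by_user[e["user"]].add(e["patient"])
        if datetime.fromisoformat(e["at"]).hour not in business_hours:
            flags[e["user"]].add("after_hours")
    for user, patients in patients_by_user.items():
        if len(patients) > max_patients:
            flags[user].add("high_volume")
    return dict(flags)
```

Flags like these are a starting point for a human review, not proof of misuse: a night-shift nurse will legitimately trigger `after_hours`.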

Although HIPAA compliance can be costly, it’s better to invest in your organization’s compliance and avoid all possible issues in advance.

10. User Education and Training

Educating users and team members ensures they know how to maintain privacy and security. Everyone who works with the data should get the knowledge they need, so you should implement the following:

- In-app tutorials and guides to explain PHI management, how to create strong passwords, and how to identify security threats like phishing

- Regular reminders about 2FA and suspicious activity

- Accessible privacy policies with clear explanations of data usage

- Regular requirements for password updates to protect accounts
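
Password requirements are easiest to follow when the app enforces them at sign-up and login. This sketch assumes a policy of at least 12 characters, mixed case, at least one digit, and a 90-day maximum password age; all of these values are placeholders for whatever your own security policy specifies.

```python
from datetime import date, timedelta
from typing import Optional

MIN_LENGTH = 12               # assumed policy value
MAX_AGE = timedelta(days=90)  # assumed policy value


def password_problems(password: str) -> list[str]:
    """Return human-readable reasons a password violates the policy (empty if OK)."""
    problems = []
    if len(password) < MIN_LENGTH:
        problems.append(f"must be at least {MIN_LENGTH} characters")
    if password.lower() == password or password.upper() == password:
        problems.append("must mix upper- and lower-case letters")
    if not any(c.isdigit() for c in password):
        problems.append("must contain a digit")
    return problems


def must_rotate(last_changed: date, today: Optional[date] = None) -> bool:
    """True once the password has reached the maximum allowed age."""
    return ((today or date.today()) - last_changed) >= MAX_AGE
```

Returning readable reasons rather than a bare yes/no doubles as in-app guidance, which fits the education goal of this section.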

If both users and organizations maintain an active role in protecting sensitive health data, then the risks of breaches and similar issues are significantly minimized.

Sync AI with HIPAA Requirements for Your Product’s Success with MobiDev’s Expertise

MobiDev’s team has helped hundreds of clients develop and maintain HIPAA-compliant software since 2009. Since 2018, our healthcare app development expertise has extended to implementing AI in healthcare applications. We can integrate pre-trained AI models and create custom AI solutions for your business while keeping the final software fully compliant with HIPAA requirements and healthcare data security standards.

Feel free to check our AI healthcare consulting services and book a call to discuss how we can help you implement AI into your healthcare product.