
Speech has always been the favored mode of communication. A person speaks an average of 15,000 words each day. And in contrast with other forms of communication like writing, speech is simple and easy to use. People feel most comfortable expressing themselves with speech. It removes the need for proper spelling, spellcheck, and any associated issues.

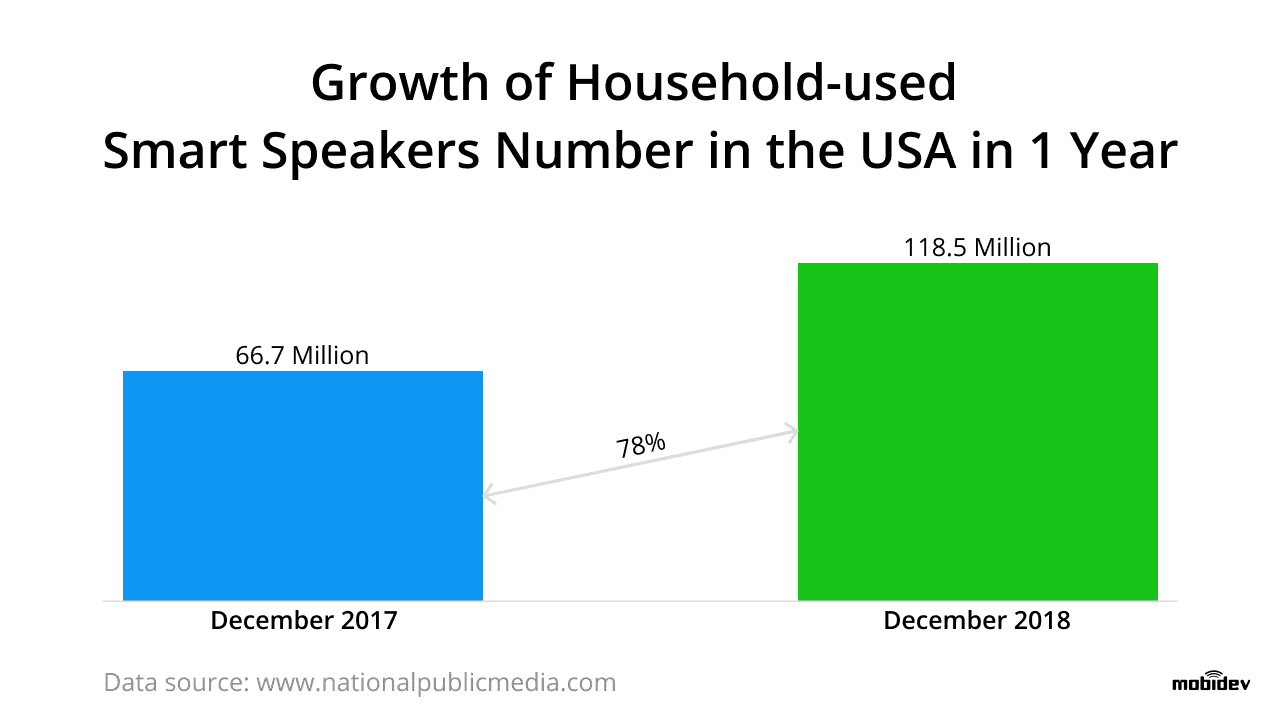

Consequently, more and more people worldwide own smart speakers. Research by NPR and Edison Research indicates that 53 million Americans currently own at least one. Furthermore, nearly 25% of Americans use some form of voice-activated device regularly, including children and other household members.

It is predicted that voice assistants will replace today’s Interactive Voice Response menus. Yet while these devices are making huge inroads into personal use, the enterprise market for speech-driven technologies lags behind, even though voice assistants have the potential to transform the business world in the near future.

Voice-enabled technology in 2020: unfolding the potential for the enterprise

When considering voice-enabled solutions at the enterprise level, one instructive area to focus on is the level of adoption by employees in their personal lives. If workers are already using voice assistants ‘off the clock’, it stands to reason they’d be able to take to them quickly if the technology were deployed professionally.

According to Globant’s 2018 report, senior employees and decision-makers have largely adopted voice-activated technologies, with around 44% using them daily and nearly 72% using them weekly in their personal lives. Yet despite this ubiquity in the personal sphere, fewer than 31% of senior employees use any kind of voice-enabled technology at the enterprise level.

Clearly, voice technology is perceived differently in the workplace than in a home setting. However, it’s not clear why this tech would be deemed appropriate in one setting and not the other.

This sort of bifurcated thinking extends to planning: three-quarters of companies have identified voice-enabled technology as a primary initiative, yet few have made any concrete plans to integrate it into their business models.

Foot-dragging businesses often cite the upfront investment necessary to implement a new technology as a limiting factor. This extends both to the cost of the devices and related technology and the manpower lost due to training employees on how to operate the technology.

For many technologies, that objection would carry weight. In the field of voice-enabled technology, however, the second half especially is a poor excuse. First of all, most potential business users (and customers) are already using this tech in their day-to-day lives. Secondly, voice assistants are notable for their ease of use, so training will be significantly shorter than in other adoption cases.

Integration of voice-enabled technologies: a view from the tech perspective

Voice assistants rely on Machine Learning technologies like Natural Language Processing (NLP). How do they work? It all begins with some kind of trigger that informs the system that speech-based data will be incoming. This trigger may take the form of a physical button for the user to press. In modern voice-enabled solutions, the well-known phrases ‘Okay, Google’, ‘Alexa’, and ‘Hey, Siri’ work as triggers.

Going beyond generic systems, voice-enabled tech can offer specific voice recognition using powerful neural networks and artificial intelligence. Those types of solutions frequently rely on cloud computing.

Once a voice stream is captured by a device, that speech is translated into text. The algorithm is then able to query its business rules. This helps to determine which of its known skill invocation words may match the input speech.

Assuming it finds a match, the algorithm will execute the associated task. This frequently involves communicating instructions to other connected devices, appliances and electronic systems. For example, many smart devices allow users to turn off the light. The device parses the speech, recognizes the skill invocation phrase, and sends a signal to an IoT cloud, where it is processed and sent back to the smart device as a command to cut power to the light.
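The flow described above (trigger, speech converted to text, invocation phrase matching, task execution) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the transcript is assumed to be already produced by a speech-to-text service, and the wake word, the phrase table, and the `turn_off_light` / `turn_on_light` functions are hypothetical placeholders rather than part of any real assistant’s API.

```python
from typing import Callable, Dict, Optional

WAKE_WORD = "alexa"  # assumed trigger phrase for this sketch


def turn_off_light() -> str:
    # Placeholder: a real device would send a command to an IoT cloud here.
    return "light: off"


def turn_on_light() -> str:
    # Placeholder, same assumption as above.
    return "light: on"


# Hypothetical invocation phrases mapped to the tasks they trigger.
SKILLS: Dict[str, Callable[[], str]] = {
    "turn off the light": turn_off_light,
    "turn on the light": turn_on_light,
}


def normalize(text: str) -> str:
    """Lowercase the text, replace punctuation with spaces, collapse whitespace."""
    cleaned = "".join(
        ch if ch.isalnum() or ch.isspace() else " " for ch in text.lower()
    )
    return " ".join(cleaned.split())


def handle_transcript(transcript: str) -> Optional[str]:
    """Run the matching task, or return None if nothing applies."""
    words = normalize(transcript)
    # Ignore any speech that does not start with the trigger.
    if not words.startswith(WAKE_WORD + " "):
        return None
    command = words[len(WAKE_WORD):].strip()
    # Look for a known invocation phrase inside the command.
    for phrase, task in SKILLS.items():
        if phrase in command:
            return task()
    return None


if __name__ == "__main__":
    print(handle_transcript("Alexa, turn off the light."))
    print(handle_transcript("Turn off the light."))
```

In this sketch, speech without the wake word is ignored, and a command with no matching phrase returns `None`, so the caller can decide how to respond.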

A voice assistant device can operate under simple one-step commands or via complex, interconnected logic, which may involve numerous voice instructions coming from multiple sources. In the end, everything is reduced to inputs and outputs, with the programmed outcomes dictated beforehand.

As a result, a voice-enabled module can be integrated with a wide range of hardware. Once the speech has been translated to text, the device runs an algorithm predetermined by the developer. This makes it possible to add Alexa Skills to nearly any pre-existing software solution.

The challenge here comes from two areas: adjusting the hardware to the integrated voice-enabled module, and properly configuring the firmware for the microphone and speakers.

Voice assistant case study: integration of Alexa Skills with a staff management system

Using a Smart Mirror as an example, Michael Lytvynenko, Python & IoT Developer at MobiDev, demonstrates the approaches one might take, as well as the possible areas of concern, when building a voice-enabled solution.

It’s possible to build a custom hardware/software suite using existing voice recognition solutions, involving full design and client/server architecture. This might be the route you’d choose if you require a significantly customized product.

Alternatively, the Alexa voice assistant can expand software capabilities. Alexa Skills can be added to pre-existing software. Alexa offers a suite of predefined voice commands (for instance, ‘Alexa, what’s the weather like now?’). You also have the ability to create new voice commands depending on the needs of a particular application. Once you’ve created Alexa Skills, you can publish them to the Alexa Skills Store, making them available to users around the world. For e-commerce, this means that people who have installed the Alexa Skills on their devices may make voice-enabled purchases of products or services.

The use case below shows how Alexa can be integrated with staff management software to manage employee data and internal events.

With Alexa Skills integrated, employees are able to use custom voice commands with high recognition accuracy, and the integration can be delivered within a short timeline.
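To make the idea of a custom voice command more concrete, here is a minimal sketch of a backend handler for a custom skill, written as a plain AWS Lambda-style function that reads the JSON request Alexa sends and returns a spoken response. It is not the implementation from the case study. The intent name `GetEventIntent`, the `day` slot, and the in-memory `STAFF_EVENTS` table are hypothetical stand-ins for a real staff management system.

```python
from typing import Any, Dict, Optional

# Hypothetical stand-in for data held by a staff management system.
STAFF_EVENTS: Dict[str, str] = {
    "monday": "team stand-up at 10 a.m.",
    "friday": "quarterly review at 3 p.m.",
}


def build_response(text: str, end_session: bool = True) -> Dict[str, Any]:
    """Wrap plain text in the JSON envelope Alexa expects from a skill."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


def lambda_handler(event: Dict[str, Any], context: Optional[Any] = None) -> Dict[str, Any]:
    """Entry point: route an incoming Alexa request to a reply."""
    request = event.get("request", {})
    request_type = request.get("type")

    # The user opened the skill without asking for anything specific.
    if request_type == "LaunchRequest":
        return build_response(
            "Welcome to staff manager. Ask me about events.", end_session=False
        )

    if request_type == "IntentRequest":
        intent = request.get("intent", {})
        # GetEventIntent and its 'day' slot are assumptions of this sketch.
        if intent.get("name") == "GetEventIntent":
            slot = intent.get("slots", {}).get("day", {})
            day = (slot.get("value") or "").lower()
            event_text = STAFF_EVENTS.get(day)
            if event_text:
                return build_response(f"On {day}: {event_text}")
            return build_response("I could not find any events for that day.")

    return build_response("Sorry, I did not understand that.")
```

A real skill would also need an interaction model (sample utterances and slot types) defined in the Alexa developer console, and would query the actual staff management backend instead of a dictionary.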

The future of voice assistants in the enterprise

Recently, Google CEO Sundar Pichai announced that around 20% of current Google queries through Android devices and apps are voice searches. Voice technology already plays a large role in our daily digital lives and has become one of the hottest trends.

Businesses are finally starting to get on board with adopting the technology. Nearly 90% of companies report an intent to invest in voice-enabled customer-facing solutions.

Optimism runs high, with close to 90% of managers stating that voice-activated devices can offer a competitive edge. More than half of them believe this technology can deliver improvements in operations. Hands-free ERP system management and voice-enabled inventory reports are only a few of the possible use cases.

Voice recognition powered by neural networks

Aleksander Solonskyi, Data Science Engineer at MobiDev, gives his thoughts on what lies at the heart of voice technology.

The core of what makes voice assistants work is the conversion of spoken words into text data. To accomplish this, voice technology uses neural networks to parse and classify speech.

Successfully converting speech to text depends on three primary aspects. The first is the level of accuracy in recognition. The second is the length of the phrases that must be recognized. The third is the number of phrases the system will need to identify and differentiate between. The more complicated or exacting a solution must be on those three aspects, the more data it will require, which translates to more effort and money invested in order to make it work.
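The first aspect, recognition accuracy, is commonly quantified with word error rate (WER): the number of word substitutions, insertions, and deletions needed to turn the recognized text into the reference text, divided by the number of words in the reference. The sketch below is a generic illustration of that metric, not a method taken from this article. The function name and sample phrases are made up for the example.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] = edit distance between the words of ref processed so far
    # and the first j words of hyp (classic dynamic programming table,
    # kept one row at a time).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr.append(
                min(
                    prev[j] + 1,                      # deletion
                    curr[j - 1] + 1,                  # insertion
                    prev[j - 1] + substitution_cost,  # match or substitution
                )
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One wrong word out of four in the reference.
    print(word_error_rate("turn off the light", "turn of the light"))
```

A lower WER means better recognition. Tracking it per phrase set makes it easier to judge how much additional training data a given accuracy target is likely to need.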

When it comes to existing phrases, we already possess a number of libraries and datasets which can greatly aid in accuracy. For more exotic new phrases, additional training may be required.

The next level in speech recognition is understanding not only of spoken words, but also of intonations. Mastering intonations requires multiple networks joined together in a branching structure.

What are the constraints of using voice assistants for the enterprise?

While the benefits of AI assistance technology are numerous and obvious, the field hasn’t fully matured in all respects. The following constraints are slowing down adoption in certain areas.

Protection of sensitive data

Security is likely the primary issue faced by voice-activated technologies. While most personal applications are fairly trivial, enterprise applications will be more technical and will be used within more analytical frameworks. Integration with a third-party system often narrows voice assistant use cases down to those that operate only on data that is not highly sensitive.

Language support

Among the more than 7,000 languages spoken worldwide, 200 are reported to be spoken by more than 1 million people, and just 23 are spoken by more than half the world’s entire population. Considering this, the coverage of modern voice assistants is rather limited.

For example, Google Assistant provides developers with Actions in 19 languages. Amazon Alexa recognizes only 6 languages across 15 regions. Microsoft Cortana supports 8 languages and 15 locales. Apple Siri fares better, covering 21 languages in 36 countries, with dozens of dialects to boot.

Lack of proper localization may cause voice assistants to miss cultural specifics and norms. For example, a test run by Vocalize.ai in September 2018 found that Amazon Echo and Apple HomePod recognized only 78% of words in Chinese, as opposed to a more confident 94% of words in English.

User experience

Unfortunately, users of voice-activated devices are not currently exploring all the capabilities available to them. Nearly half of voice assistant users stick with a narrow range of options, despite having around 80,000 choices available on the Alexa network. These commands are simple and readily accessible, yet most users do not experiment beyond the handful they already know.

Should one invest in voice assistance technologies?

At this point in time, inertia is the biggest problem facing voice assistant technology. People simply aren’t yet motivated to break the habit of controlling their devices manually and switch to speech. This is doubly the case in the business world.

While their progress isn’t as rapid as some predicted, voice-enabled solutions are gradually pervading our personal and professional lives. It seems that the psychological hurdle of becoming comfortable communicating with our machines via voice commands is all that stands between us and great gains in ease of use and efficiency.

Right now, voice-enabled technology should be regarded as more of a mid-term investment. Adoption will require some patience and effort, but the business gains it promises will eventually be rewarding.