Among augmented reality platforms, ARKit stands out for both popularity and performance. Apple’s tightly integrated ecosystem, standardized hardware, and ARKit’s strong performance in augmented reality applications make it highly desirable for AR developers. Choosing to build an app with the native iOS framework unlocks the potential for more advanced AR products.

Drawing on our extensive experience building AR-powered apps, we’ve created this ARKit development guide to help you fill gaps in your understanding of how ARKit projects work.

ARKit Features for Providing Immersive Experience on iOS

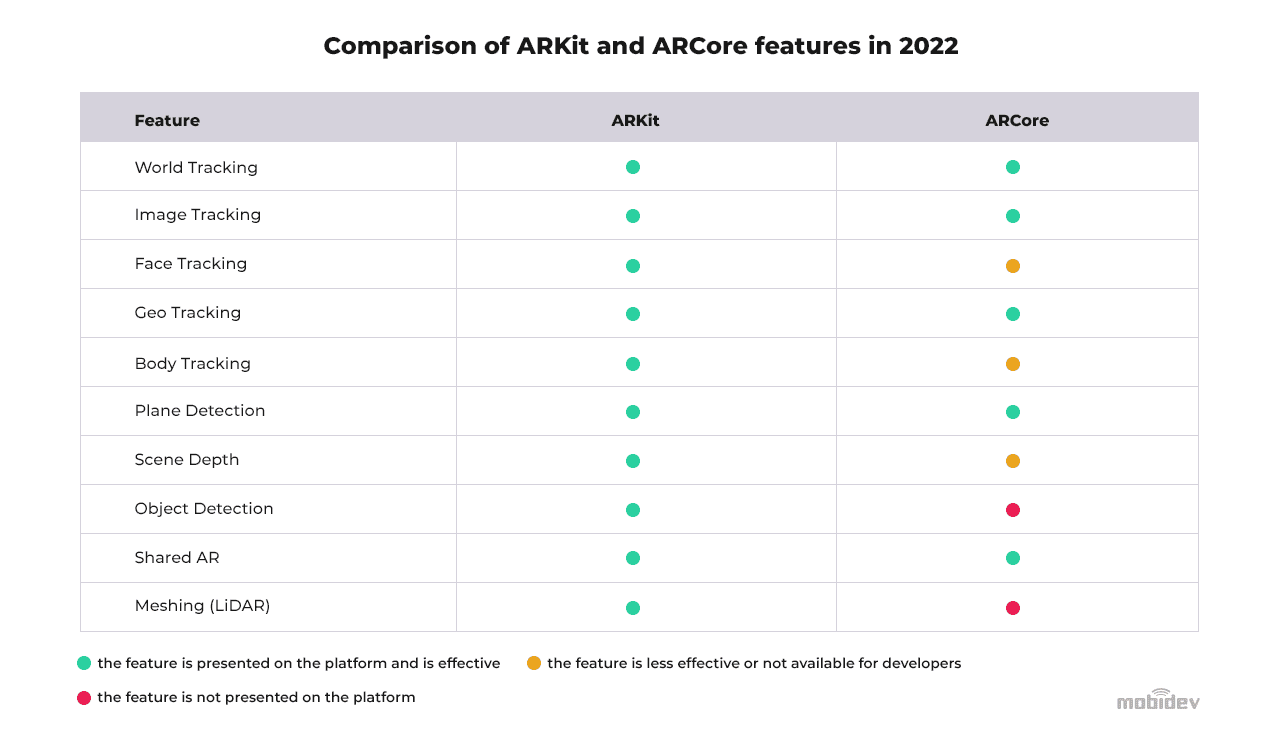

The most important aspect of ARKit is that it is the only framework that unlocks the full potential of iPhone and iPad hardware for augmented reality experiences. Some of the greatest features that ARKit has to offer are object and scene recognition, meshing, geographic tracking, face tracking, and more.

ARKit is often compared to its Android counterpart, ARCore. Like ARKit, ARCore makes it possible to use the full potential of an Android device for augmented reality. However, Android devices are far more diverse than Apple devices, with a wide range of specifications and performance levels. As a result, ARKit’s performance is much more predictable and consistent.

If you are targeting only iOS, or if you specifically want to use the full power of Apple devices for AR experiences, ARKit is the way to go. If you plan to create two native apps, one for iOS and one for Android, we recommend starting with iOS development: it provides more convenient tools for developers than Android, which makes it a cost-effective way to check the feasibility of your idea. It also covers more potential users.

How to Create Augmented Reality with ARKit

Augmented reality experiences on iOS devices are accomplished via three steps: tracking, scene understanding, and rendering. AR applications require input from sensors like cameras, accelerometers, and gyroscopes. This data then must be processed to determine the camera’s motion in the real world. Once this is complete, 3D virtual objects can be rendered on top of the real world image and displayed to the user.

More advanced AR applications use depth sensors to understand the layout of the scene. This allows for a better sense of scale and can even support features like occlusion, where real-world objects that are in front of virtual objects hide them from view.

For most AR applications, the best environment is a well-textured, well-lit area. A flat surface works best for visual odometry, and a static scene works best for motion odometry. When the environment does not meet these conditions, ARKit provides information on the tracking state. The three possible states are not available, normal, and limited.

ARKit has plenty of features beyond simply displaying objects on flat surfaces. For instance, ARKit 5 supports vertical surfaces, image recognition, and objects placed in geographic space. In order to see the capabilities of ARKit in action, let’s take a look at several use cases that can help your business carve an innovative path to success and corner the market with ARKit development.

Case Study #1: Outdoor and Indoor AR Navigation

GPS is the king of outdoor navigation. However, it loses accuracy indoors and when obstructed by terrain or tall buildings. Emerging technologies like Bluetooth beacons, Wi-Fi RTT, and Ultra-Wideband (UWB) are filling the gap. Augmented reality navigation can combine GPS with these technologies to give users on-screen directions in the form of virtual elements drawn over the real world.

With AR indoor navigation powered by alternative positioning technologies, navigating shopping malls, convention centers, and airport terminals can become much easier. It can also be used in business contexts in stores and distribution centers to help workers find items and packages that they need.

Using Innovative Technologies For Indoor Positioning

If GPS isn’t available, alternative positioning technologies like Bluetooth, Wi-Fi RTT, and UWB can help the device acquire its precise location. After that, ARKit and the rendering engine take care of the rest.

An often-discussed solution in such cases is an IoT beacon network, but our research has shown that the capabilities of beacons for precise AR navigation are quite limited. In our experiment, beacon navigation accuracy was 3-5 meters. That would be acceptable for 2D navigation, but AR requires higher accuracy, preferably within 1 meter or better.

There are various challenges to this approach. The beacon network must be carefully positioned to avoid interference and obstruction. For example, UWB signals are weakened or blocked by walls, people, and even plants. A beacon network can also be expensive to deploy and is only useful in certain contexts.

Given that beacons don’t allow us to create accurate indoor AR navigation, let’s look at other options that can help us achieve this goal.

Image Recognition and Machine Vision

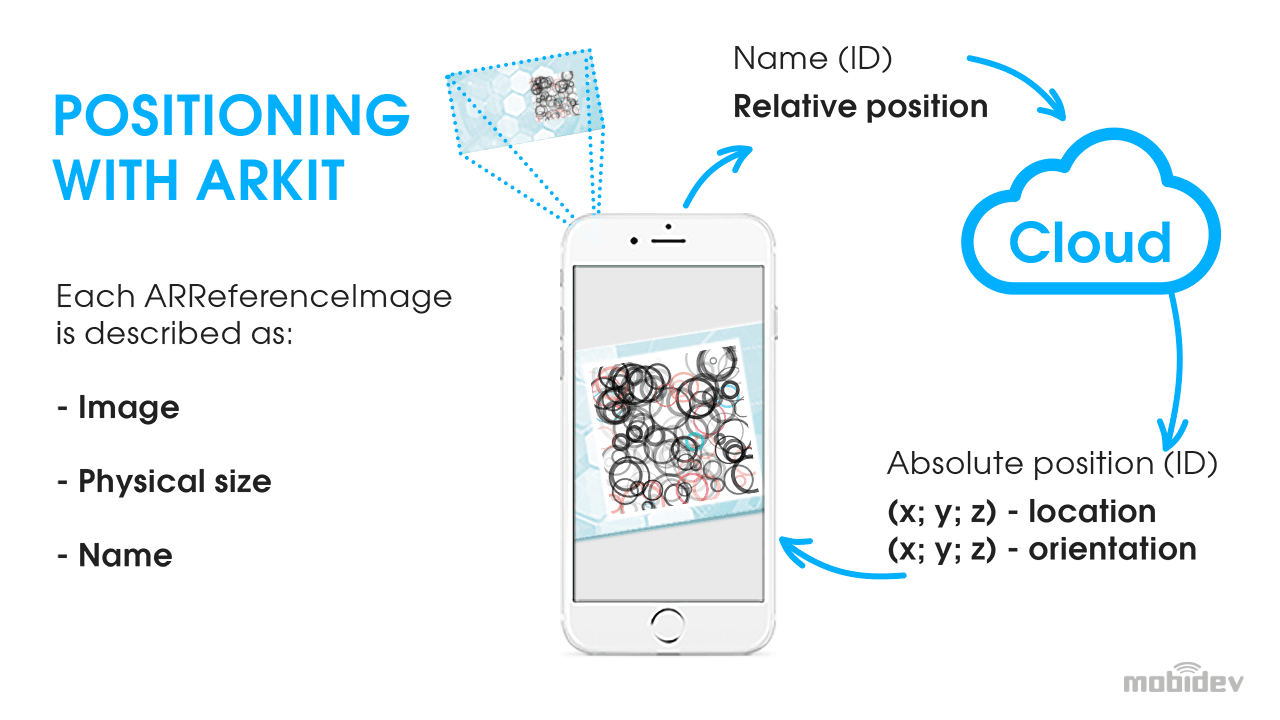

Another way to provide precise indoor positioning is to use visual markers as a frame of reference. This works similarly to ARKit Location Anchors, which search for matching images in the Look Around database to find the device’s geographic position. However, instead of searching the Apple Maps database, we create our own database of visual markers. These could be QR codes or any other kind of unique code on any surface. When scanned, these markers communicate location metadata to the device.

With the 3D coordinates obtained from the marker, mobile devices can initiate AR navigation experiences to help users find their way through buildings. This eliminates the need for expensive IoT beacon networks. The only required maintenance would be ensuring that the markers are clean and unobstructed.
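As a rough illustration of the marker idea, the sketch below decodes a hypothetical JSON payload from a scanned marker and combines it with the device’s offset from that marker to estimate where the device is in building coordinates. It is written in Python only for brevity; a production app would do this in Swift on top of ARKit. The payload fields (`x`, `y`, `z`, `heading_deg`), the function names, and the coordinate convention are all assumptions made for this example, not part of ARKit or of our project.

```python
import json
import math


def parse_marker_payload(payload: str) -> dict:
    """Decode the (hypothetical) JSON payload stored in a scanned marker."""
    data = json.loads(payload)
    return {
        # Marker position in building coordinates, in meters (x east, y north, z up).
        "position": (float(data["x"]), float(data["y"]), float(data["z"])),
        # Direction the marker faces, measured counterclockwise from the +x axis.
        "heading_deg": float(data["heading_deg"]),
    }


def device_position(marker: dict, offset: tuple) -> tuple:
    """Estimate the device position in building coordinates.

    offset is the device position relative to the marker as (right, up, forward):
    forward points out of the marker along its facing direction, and right is
    that direction rotated 90 degrees clockwise in the floor plane.
    """
    right, up, forward = offset
    theta = math.radians(marker["heading_deg"])
    mx, my, mz = marker["position"]
    # Rotate the horizontal offset from the marker frame into the building frame.
    x = mx + forward * math.cos(theta) + right * math.sin(theta)
    y = my + forward * math.sin(theta) - right * math.cos(theta)
    z = mz + up
    return (x, y, z)


# Example: a marker on a wall at (10, 5, 1.5) facing north (+y),
# with the device 2 m in front of it and 1 m to its right.
marker = parse_marker_payload('{"x": 10, "y": 5, "z": 1.5, "heading_deg": 90}')
position = device_position(marker, (1.0, 0.0, 2.0))
```

Once the device has a position in building coordinates, the navigation layer can plan a route from that point, and ARKit’s own motion tracking can carry the position forward until the next marker is scanned.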

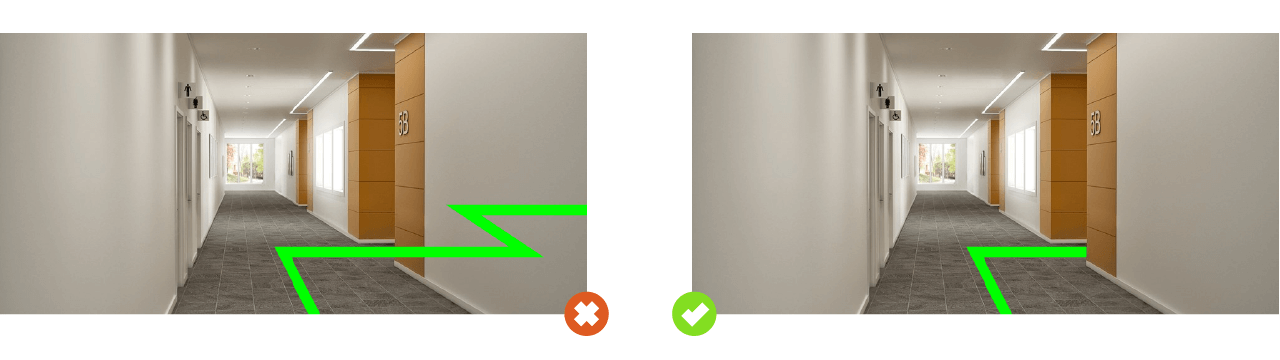

Rendering Paths with Augmented Reality: The Importance of Occlusion

Drawing content on the screen is easy. Making it blend seamlessly with the real world is much harder. For indoor navigation, a drawn line on the screen is more helpful if it becomes occluded (obstructed) by a wall or door when it curves around a corner. Without occlusion, users can become confused about where the on-screen directions are taking them.

One workaround is to draw an arrow on the screen instead of a full line, but this is less convenient for the user. Another option is to reduce the render distance. This prevents the line from being drawn too far ahead, which reduces the need to account for occlusion.
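To make the render-distance idea concrete, here is a minimal sketch in Python that clips a route to a maximum distance along the path, so that only the nearby part is handed to the renderer. The function name `clip_path` and the assumption that the route is a list of points starting at the user’s position are ours, for illustration only.

```python
import math


def clip_path(points, max_distance):
    """Return the leading part of a polyline, at most max_distance long.

    points is a list of coordinate tuples in meters, starting at the user's
    current position. The last returned point is interpolated so that the
    clipped path ends exactly at max_distance along the route.
    """
    if not points:
        return []
    clipped = [points[0]]
    remaining = max_distance
    for start, end in zip(points, points[1:]):
        segment = math.dist(start, end)
        if segment <= remaining:
            # The whole segment fits inside the render distance.
            clipped.append(end)
            remaining -= segment
        else:
            if remaining > 0:
                # Cut the segment part-way and stop.
                t = remaining / segment
                clipped.append(tuple(s + t * (e - s) for s, e in zip(start, end)))
            break
    return clipped


# A route that goes 3 m ahead and then 4 m around a corner, clipped to 5 m.
route = [(0, 0), (3, 0), (3, 4)]
visible = clip_path(route, 5)
```

The shorter the render distance, the less often the line reaches past a corner, at the cost of showing the user less of the route ahead.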

In our case study, we decided to take a different approach by creating a very low-polygon 3D model of the building. Aligning this model with the real world as seen through the user’s camera lets us support occlusion much better. It requires more time and effort in development, but it provides a much more immersive and easier-to-understand indoor navigation experience with ARKit. We believe this is one of the most cost-effective options for AR indoor navigation that doesn’t sacrifice quality.

Check our demo of ARKit navigation below to see AR indoor navigation in action!

Using Location Anchors for Outdoor AR Navigation with ARKit

Outdoor AR navigation is generally easier to accomplish than indoor navigation because more reliable technologies, such as GPS, are available. However, image recognition-based visual positioning systems (VPS) can make navigation even more accurate. This makes it much easier to display virtual objects for AR experiences like on-screen directions.

There are some drawbacks to this approach though. For example, rural areas may have street view images but not enough artificial features like tall buildings to use as references. This means that it can be harder to provide on-screen directions. Areas without any images in the database will make this method useless. Because of these limitations, image-based positioning is only useful in more urban areas.

Location Anchors allow developers to ‘pin’ virtual objects in geographic space. Users can then see these virtual objects on their screen after first obtaining their position from image recognition. However, there are some drawbacks to mention for these urban areas. Apple Look Around image data isn’t yet available for all cities. A potential alternative might be a network of beacons or GPS. A beacon network would be far more useful for smaller-scale outdoor settings. For example, beacons could be spread through a factory area to find the right workshop.

Case Study #2: AR Measuring Tools

Thanks to the robust sensors used to provide augmented reality, it’s possible to take accurate measurements of environments without ever pulling out a tape measure. This led to the development of Apple’s RoomPlan framework which uses an iPhone or iPad LiDAR scanner to create 3D floor plans of building interiors. This includes room dimensions and can even recognize types of furniture.

We worked on a similar project, creating an AR home renovation application for a client in Oregon. The app allows users to change wall colors and add new furniture and other objects. However, in some cases, users may want to use more than one material on the same surface, which takes more work to support. We chose to develop a custom algorithm based on a graph data structure to make it work as expected. This allows users to carve up any surface into any number of zones and choose a different material for each zone.

Even though we created a custom solution in this case, RoomPlan is now available and can make similar projects easier for developers. RoomPlan enables developers to efficiently output room scans in USD or USDZ file formats. These include room dimensions and the objects the room contains. The files can then be imported into other software for further processing and development. Room scanning works extremely quickly, allowing users to create scans of rooms in seconds.

Case Study #3: Face-Based Augmented Reality

A major advantage of ARKit over ARCore is hardware compatibility and stability. The TrueDepth camera, introduced with the iPhone X, provides consistent quality for face-based AR. Just like other kinds of AR, this involves tracking, scene understanding, and rendering.

ARKit processes the sensor data into three outputs: tracking data, a face mesh, and blend shapes. Tracking data tells developers how to position content as the subject moves their face. The face mesh is the geometry of the face. Blend shapes are a set of parameters that describe a detected face in terms of the movements of specific facial features. Each parameter has a corresponding floating-point value indicating the current position of that feature relative to its neutral configuration, ranging from 0.0 (neutral) to 1.0 (maximum movement).

Face-based AR is used for AR face masks and filters to improve user experience and boost engagement. Virtual try-on solutions are another popular option for consumers and businesses. Face-based AR lets consumers try on products like sunglasses and makeup without having to leave the house. Brands of clothing, footwear, accessories, and cosmetics have been implementing this top retail trend for a long time, and with growing interest in the metaverse, it is becoming even more popular.

Non-AR Applications of Face-Based ARKit Technologies

It’s important to remember that, because of the high fidelity of its face tracking, ARKit is not useful only for AR experiences. It can also be used purely for face-tracking applications without augmented reality.

For instance, tracking weight loss is possible through facial measurements. As a person gains or loses weight, the width of their face changes. iPhone TrueDepth cameras can detect these variations, and an app can measure them over time. Face tracking can also be used to control on-screen elements through blend shapes and eye movements. With a little imagination, face-based AR opens up a lot of possibilities with ARKit solutions.

Check out the MobiDev demo to find out more about gaze tracking capabilities and use cases.

Another non-AR use of face-based ARKit technologies is driver safety. The WakeUp app developed by MobiDev illustrates this. It tracks and acts upon changes in the position of a driver’s head and eyes in space. This iOS app warns users when it detects signs of distracted or drowsy driving by playing a sound to get their attention. ARKit combines two sources of data for this purpose:

- Front-facing camera: facial recognition.

- TrueDepth camera: provides more advanced face tracking.

Together, these two sensors allow ARKit to detect facial feature points and eyelid closures. When data reaches certain thresholds, the app can warn the user with a high level of accuracy.
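The threshold logic can be sketched in a few lines. The Python example below assumes the app receives, for every frame, the two eye-blink blend shape values (ARKit exposes them as `eyeBlinkLeft` and `eyeBlinkRight`, each between 0.0 and 1.0) and raises a warning when both eyes stay closed for a number of consecutive frames. The function name and both threshold values are hypothetical and chosen for illustration; they are not the values used in WakeUp.

```python
def detect_drowsiness(frames, closed_threshold=0.8, min_closed_frames=45):
    """Return the index of the frame that triggers a warning, or None.

    frames is an iterable of (left, right) eye-blink values in the range
    0.0 (eye open) to 1.0 (eye fully closed), one pair per camera frame.
    A warning is triggered once both eyes have been at or above
    closed_threshold for min_closed_frames frames in a row.
    """
    consecutive = 0
    for index, (left, right) in enumerate(frames):
        if left >= closed_threshold and right >= closed_threshold:
            consecutive += 1
            if consecutive >= min_closed_frames:
                return index
        else:
            # Eyes opened again, so an ordinary blink resets the counter.
            consecutive = 0
    return None


# Ten frames with open eyes followed by five frames with closed eyes.
samples = [(0.1, 0.1)] * 10 + [(0.9, 0.95)] * 5
warning_frame = detect_drowsiness(samples, min_closed_frames=3)
```

Requiring several consecutive frames is what separates a normal blink from eyes that stay closed. In practice the frame count would be tuned to the camera frame rate and combined with head-position data.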

Case Study: WakeUp: iOS Solution Preventing Drowsy Driving

Future of ARKit Development

ARKit has enormous potential thanks to its indoor navigation, facial recognition, room scanning features, and more. The number of active ARKit devices grows every year and reached about 1,368 million in 2022, compared to 1,250 million in 2021, according to ARtillery Intelligence. This growth reflects rising interest among businesses in using ARKit to provide immersive experiences for customers.

Although the technology involved in augmented reality development with ARKit is important, the most critical component of any successful ARKit project is an expert development team. Without clear, innovative objectives, businesses can’t create unique and valuable solutions to problems. Only experienced ARKit developers can turn plans into performant software.

Why Choose MobiDev for ARKit App Development

If you’re ready to make your idea a reality, our AR development team can help you find the solutions you need to gain an edge in the market.

With extensive experience in innovative technologies, our augmented reality developers know how to overcome the limits of existing AR frameworks to create more effective solutions. Combining AR with advanced artificial intelligence and machine learning algorithms can help you provide more accurate and realistic AR experiences, improve app performance, and increase customer satisfaction.

Rely on our cross-domain multi-platform AR expertise to create an exciting solution that brings real value to your business.